Cody Coleman is the Founder and CEO of Coactive AI. He is also a co-creator of DAWNBench and MLPerf and a founding member of MLCommons. His work ranges from performance benchmarking of hardware and software systems to computationally efficient methods for active learning and core-set selection. He holds a Ph.D. in Computer Science from Stanford University, where he was advised by Professors Matei Zaharia and Peter Bailis, and an MEng and BS from MIT.

What I Learned From Convergence 2022

Last week, I attended Comet ML’s Convergence virtual event. The event featured presentations from data science and machine learning experts, who shared their best practices and insights on developing and implementing enterprise ML strategies. There were talks on emerging tools, approaches, and workflows that can help you manage an ML project effectively from start to finish.

In this blog recap, I will dissect content from the event’s technical talks, which cover a wide range of topics, from testing models in production and assessing data quality to operational ML and minimum viable models.

What I Learned From Attending Scale Transform 2021

A few weeks ago, I attended Transform, Scale AI’s first-ever conference that brought together an all-star line-up of the leading AI researchers and practitioners. The conference featured 19 sessions discussing the latest research breakthroughs and real-world impact across industries.

In this long-form blog recap, I will dissect content from the session talks that I found most useful. These talks cover everything from the future of ML frameworks and the importance of a data-centric mindset to AI applications at companies like Facebook and DoorDash. To be honest, the quality of the conference was so high that it was hard to choose which talks to recap.

What I Learned From Attending RE•WORK Deep Learning 2.0 Summit 2021

At the end of January, I attended RE•WORK’s Deep Learning 2.0 Virtual Summit, which brought together the latest technological advancements and practical examples of applying deep learning to challenges in business and society. In this long-form blog recap, I will dissect content from the talks that I found most useful. The post covers 17 talks divided into 5 sections: Enterprise AI, Ethics and Social Responsibility, Deep Learning Landscape, Generative Models, and Reinforcement Learning.

Bayesian Meta-Learning Is All You Need

Recommendation System Series Part 7: The 3 Variants of Boltzmann Machines for Collaborative Filtering

In this post and those to follow, I will be walking through the creation and training of recommendation systems, as I am currently working on this topic for my master's thesis. Part 7 explores the use of Boltzmann Machines for collaborative filtering. More specifically, I will dissect three principled papers that incorporate Boltzmann Machines into their recommendation architectures. But first, let’s walk through a primer on Boltzmann Machines and their variants.

Recommendation System Series Part 6: The 6 Variants of Autoencoders for Collaborative Filtering

In this post and those to follow, I will be walking through the creation and training of recommendation systems, as I am currently working on this topic for my master's thesis. In Part 6, I explore the use of autoencoders for collaborative filtering. More specifically, I will dissect six principled papers that incorporate autoencoders into their recommendation architectures.

Datacast Episode 34: Deep Learning Generalization, Representation, and Abstraction with Ari Morcos

Ari Morcos is a Research Scientist at Facebook AI Research who works on understanding the mechanisms underlying neural network computation and function, and on using these insights to build machine learning systems more intelligently. In particular, Ari has worked on a variety of topics, including the lottery ticket hypothesis, the mechanisms underlying common regularizers, the properties predictive of generalization, methods to compare representations across networks, the role of single units in computation, and strategies to measure abstraction in neural network representations.

Previously, he worked at DeepMind in London and earned his Ph.D. in Neurobiology at Harvard University, where he used machine learning to study the cortical dynamics underlying evidence accumulation for decision-making.

Datacast Episode 33: Domain Randomization in Robotics with Josh Tobin

Josh Tobin is the founder and CEO of a stealth machine learning startup. Previously, Josh worked as a deep learning and robotics researcher at OpenAI and as a management consultant at McKinsey. He is also the creator of Full Stack Deep Learning, the first course focused on the emerging engineering discipline of production machine learning. Josh did his Ph.D. in Computer Science at UC Berkeley, advised by Pieter Abbeel.

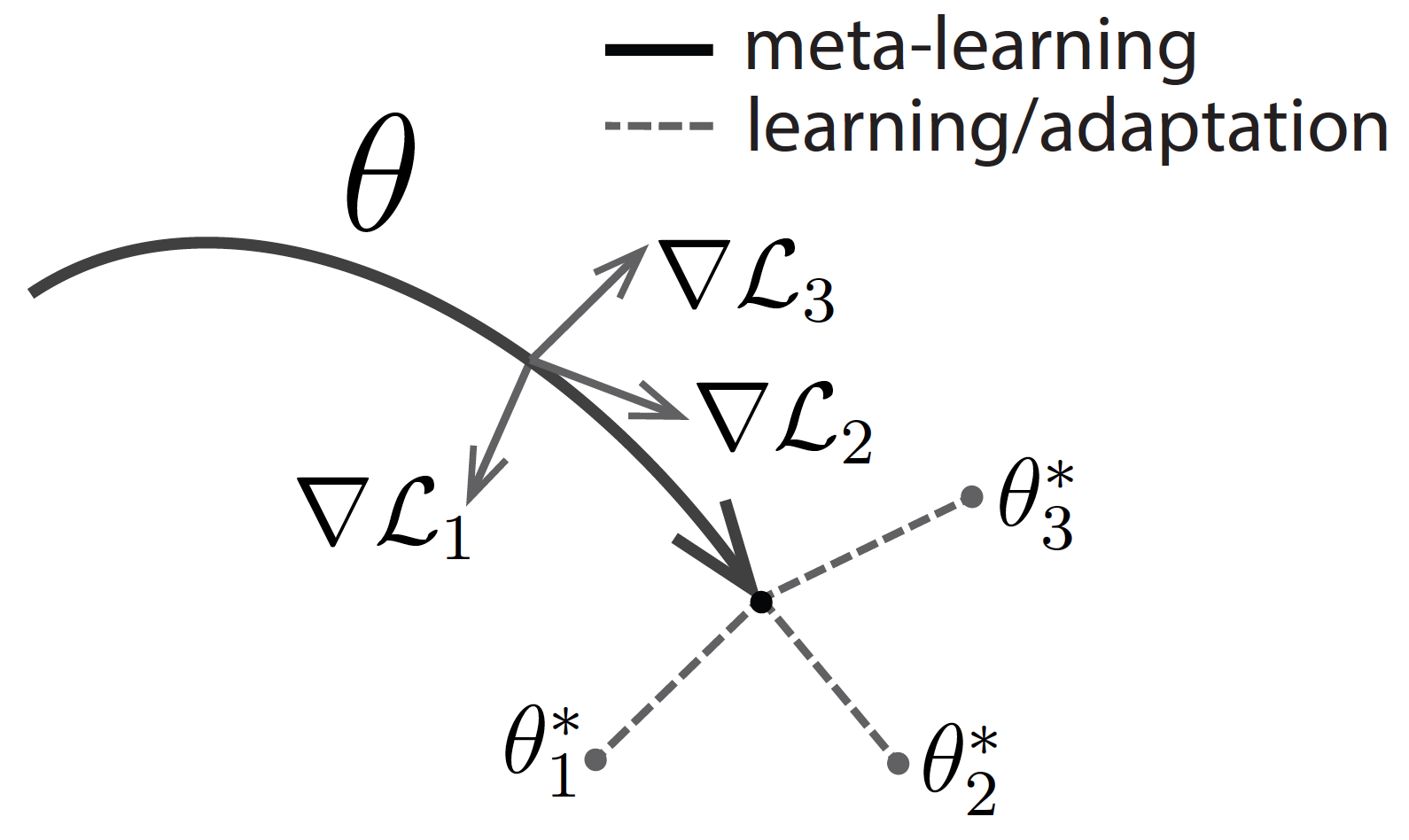

Meta-Learning Is All You Need

Meta-learning, also known as learning how to learn, has recently emerged as a promising learning paradigm that can absorb information from one task and generalize it proficiently to unseen tasks. During quarantine, I started watching the lectures of Stanford’s CS 330 class on Deep Multi-Task and Meta Learning, taught by the brilliant Chelsea Finn. Drawing on her lectures, this blog post attempts to answer these key questions:

Why do we need meta-learning?

How does the math of meta-learning work?

What are the different approaches to design a meta-learning algorithm?