Frank Liu is the Director of Operations at Zilliz with nearly a decade of industry experience in machine learning and hardware engineering. Prior to joining Zilliz, Frank co-founded an IoT startup based in Shanghai and worked as an ML Software Engineer at Yahoo in San Francisco. He presents at major industry events such as Open Source Summit and writes tech content for leading publications such as Towards Data Science and DZone. Frank holds MS and BS degrees in Electrical Engineering from Stanford University.

Datacast Episode 80: Creating The Sense of Sight with Alberto Rizzoli

Alberto Rizzoli is co-founder of V7, a platform for deep learning teams to manage training data workflows and create image recognition AI. V7 is used by AI-first companies and enterprises, including Honeywell, Merck, General Electric, and MIT.

Alberto founded his first startup at age 19 and made the MakerFaire’s 20 under 20 list. In 2015, he began working on AI with Simon Edwardson while studying under Ray Kurzweil, leading to the creation of the first engine capable of running large deep neural networks on smartphone CPUs. Later, this project became Aipoly, a startup that helped the blind identify over 3 billion objects to date using their phones.

Alberto's work on AI earned him an award from, and a personal audience with, Italian President Sergio Mattarella, as well as Italy’s Premio Gentile for Science and Innovation. V7's underlying technology won CES Best of Innovation awards in 2017 and 2018.

Datacast Episode 61: Meta Reinforcement Learning with Louis Kirsch

Louis Kirsch is a third-year Ph.D. student at the Swiss AI Lab IDSIA, advised by Prof. Jürgen Schmidhuber. He received his B.Sc. in IT-Systems-Engineering from Hasso-Plattner-Institute (1st rank) and his Master of Research in Computational Statistics and Machine Learning from University College London (1st rank). His research focuses on meta-learning algorithms for reinforcement learning, specifically general-purpose meta-learning algorithms, a direction introduced by his work on MetaGenRL. Louis organized the BeTR-RL workshop at ICLR 2020, was an invited speaker at Meta Learn NeurIPS 2020, and won several GPU compute awards for the Swiss national supercomputer Piz Daint.

Datacast Episode 56: Apprehending Quantum Computation with Alba Cervera-Lierta

Alba Cervera-Lierta is a postdoctoral researcher in the Alán Aspuru-Guzik group at the University of Toronto. She obtained her Ph.D. at the University of Barcelona in 2019. Her background is in particle physics and quantum information theory. In recent years, she has focused on quantum computation algorithms, particularly those suited for noisy intermediate-scale quantum (NISQ) computation.

Datacast Episode 45: Teaching Artificial Intelligence with Amita Kapoor

Amita Kapoor is an Associate Professor in a college at the University of Delhi. She has 20+ years of teaching experience. She is the co-author of various best-selling books in the field of Artificial Intelligence and Deep Learning. A DAAD fellow, she has won many accolades, most recently the Intel AI Spotlight Award 2019 in Europe. As an active researcher, she has more than 50 publications in international journals and conferences. She is extremely passionate about using AI for the betterment of society and humanity in general.

Recommendation System Series Part 7: The 3 Variants of Boltzmann Machines for Collaborative Filtering

In this post and those to follow, I will be walking through the creation and training of recommendation systems, as I am currently working on this topic for my Master's thesis. Part 7 explores the use of Boltzmann Machines for collaborative filtering. More specifically, I will dissect three principled papers that incorporate Boltzmann Machines into their recommendation architecture. But first, let’s walk through a primer on the Boltzmann Machine and its variants.

Datacast Episode 34: Deep Learning Generalization, Representation, and Abstraction with Ari Morcos

Ari Morcos is a Research Scientist at Facebook AI Research working on understanding the mechanisms underlying neural network computation and function and using these insights to build machine learning systems more intelligently. In particular, Ari has worked on a variety of topics, including understanding the lottery ticket hypothesis, the mechanisms underlying common regularizers, and the properties predictive of generalization, as well as methods to compare representations across networks, the role of single units in computation, and strategies to measure abstraction in neural network representations.

Previously, he worked at DeepMind in London, and earned his Ph.D. in Neurobiology at Harvard University, using machine learning to study the cortical dynamics underlying evidence accumulation for decision-making.

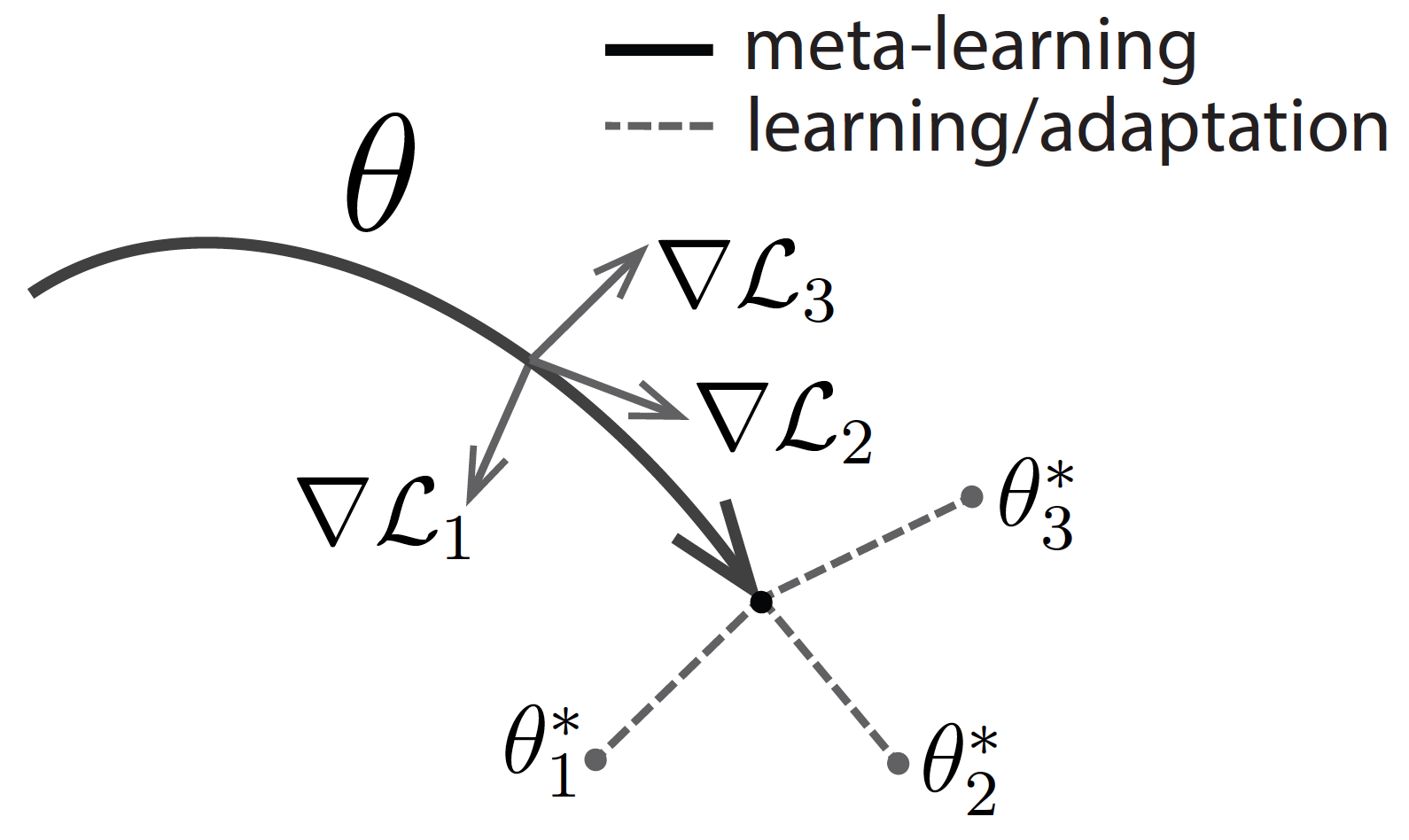

Meta-Learning Is All You Need

Meta-learning, also known as learning how to learn, has recently emerged as a promising learning paradigm that can learn information from one task and generalize that information to unseen tasks proficiently. During this quarantine time, I started watching lectures from Stanford’s CS 330 class on Deep Multi-Task and Meta Learning, taught by the brilliant Chelsea Finn. Drawing on her lectures, this blog post attempts to answer these key questions:

Why do we need meta-learning?

How does the math of meta-learning work?

What are the different approaches to designing a meta-learning algorithm?