Louis Kirsch is a third-year Ph.D. student at the Swiss AI Lab IDSIA, advised by Prof. Jürgen Schmidhuber. He received his B.Sc. in IT-Systems-Engineering from the Hasso-Plattner-Institute (ranked 1st) and his Master of Research in Computational Statistics and Machine Learning from University College London (ranked 1st). His research focuses on meta-learning algorithms for reinforcement learning, in particular general-purpose meta-learning algorithms, a direction introduced by his work on MetaGenRL. Louis organized the BeTR-RL workshop at ICLR 2020, was an invited speaker at the Meta-Learning workshop at NeurIPS 2020, and has won several GPU compute awards on Piz Daint, the Swiss national supercomputer.

Meta-Learning Is All You Need

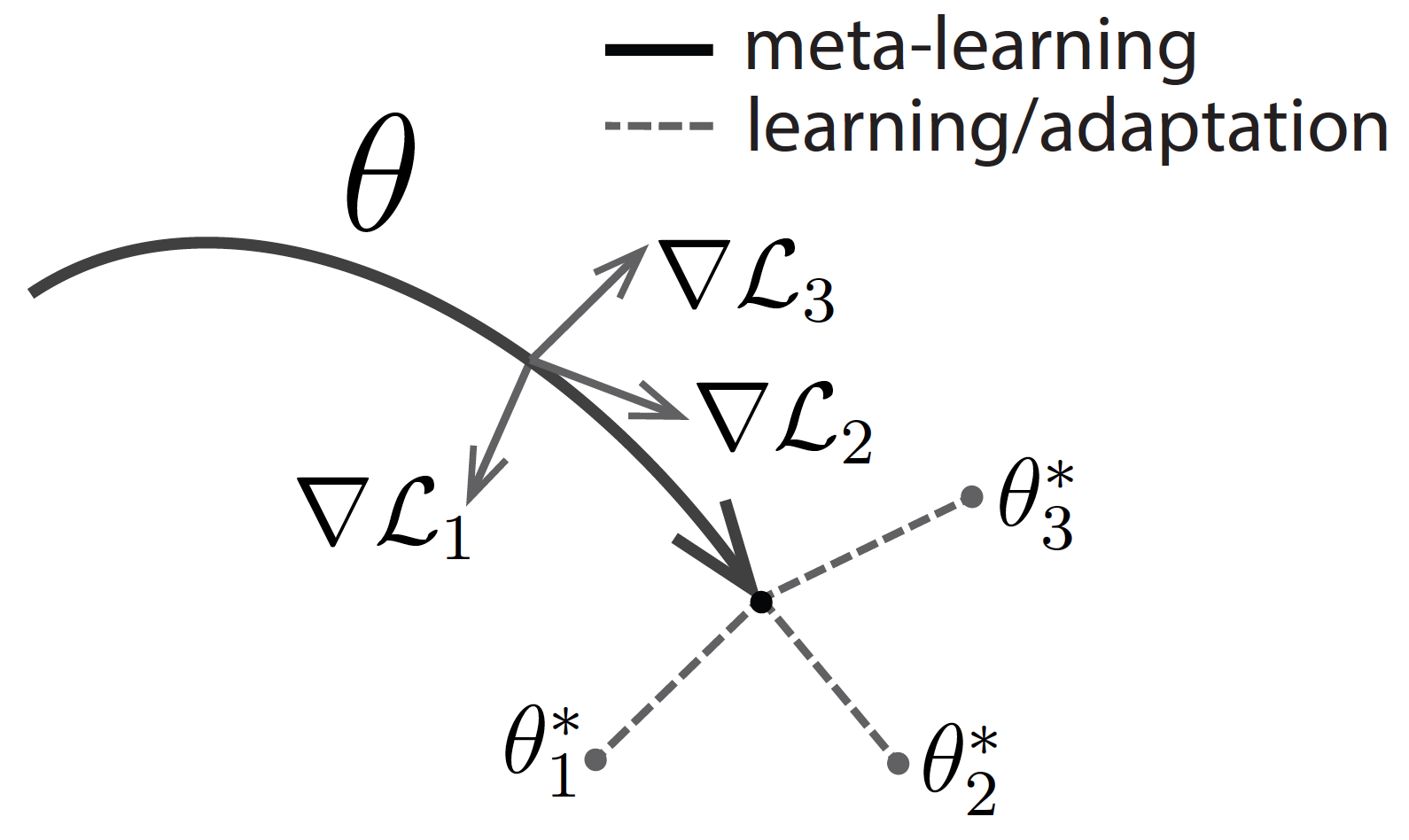

Meta-learning, also known as learning to learn, has recently emerged as a promising learning paradigm: a model acquires knowledge from a set of tasks and uses it to generalize efficiently to new, unseen tasks. During the quarantine, I started watching the lectures of Stanford’s CS 330 class, Deep Multi-Task and Meta Learning, taught by the brilliant Chelsea Finn. Drawing on her lectures, this blog post attempts to answer these key questions:

Why do we need meta-learning?

How does the math of meta-learning work?

What are the different approaches to design a meta-learning algorithm?