In this post and those to follow, I will be walking through the creation and training of recommendation systems, as I am currently working on this topic for my Master's thesis. In Part 6, I explore the use of Auto-Encoders for collaborative filtering. More specifically, I will dissect six principled papers that incorporate Auto-Encoders into their recommendation architectures.

Datacast Episode 35: Data Science For Food Discovery with Ankit Jain

Ankit Jain is a senior research scientist at Uber AI Labs, the machine learning research arm of Uber. His work primarily involves applying deep learning methods to a variety of Uber's problems, ranging from food delivery and fraud detection to self-driving cars. Previously, he worked in a variety of machine learning roles at Facebook, Bank of America, and other startups. He co-authored a book on machine learning titled TensorFlow Machine Learning Projects. Additionally, he has been a featured speaker at many top AI conferences and universities and has published papers at several top conferences such as NeurIPS and ICLR. He earned his MS from UC Berkeley and his BS from IIT Bombay.

Datacast Episode 34: Deep Learning Generalization, Representation, and Abstraction with Ari Morcos

Ari Morcos is a Research Scientist at Facebook AI Research working on understanding the mechanisms underlying neural network computation and function, and on using these insights to build machine learning systems more intelligently. In particular, Ari has worked on a variety of topics, including understanding the lottery ticket hypothesis, the mechanisms underlying common regularizers, and the properties predictive of generalization, as well as methods to compare representations across networks, the role of single units in computation, and strategies to measure abstraction in neural network representations.

Previously, he worked at DeepMind in London, and earned his Ph.D. in Neurobiology at Harvard University, using machine learning to study the cortical dynamics underlying evidence accumulation for decision-making.

Datacast Episode 33: Domain Randomization in Robotics with Josh Tobin

Josh Tobin is the founder and CEO of a stealth machine learning startup. Previously, Josh worked as a deep learning & robotics researcher at OpenAI and as a management consultant at McKinsey. He is also the creator of Full Stack Deep Learning, the first course focused on the emerging engineering discipline of production machine learning. Josh did his Ph.D. in Computer Science at UC Berkeley, advised by Pieter Abbeel.

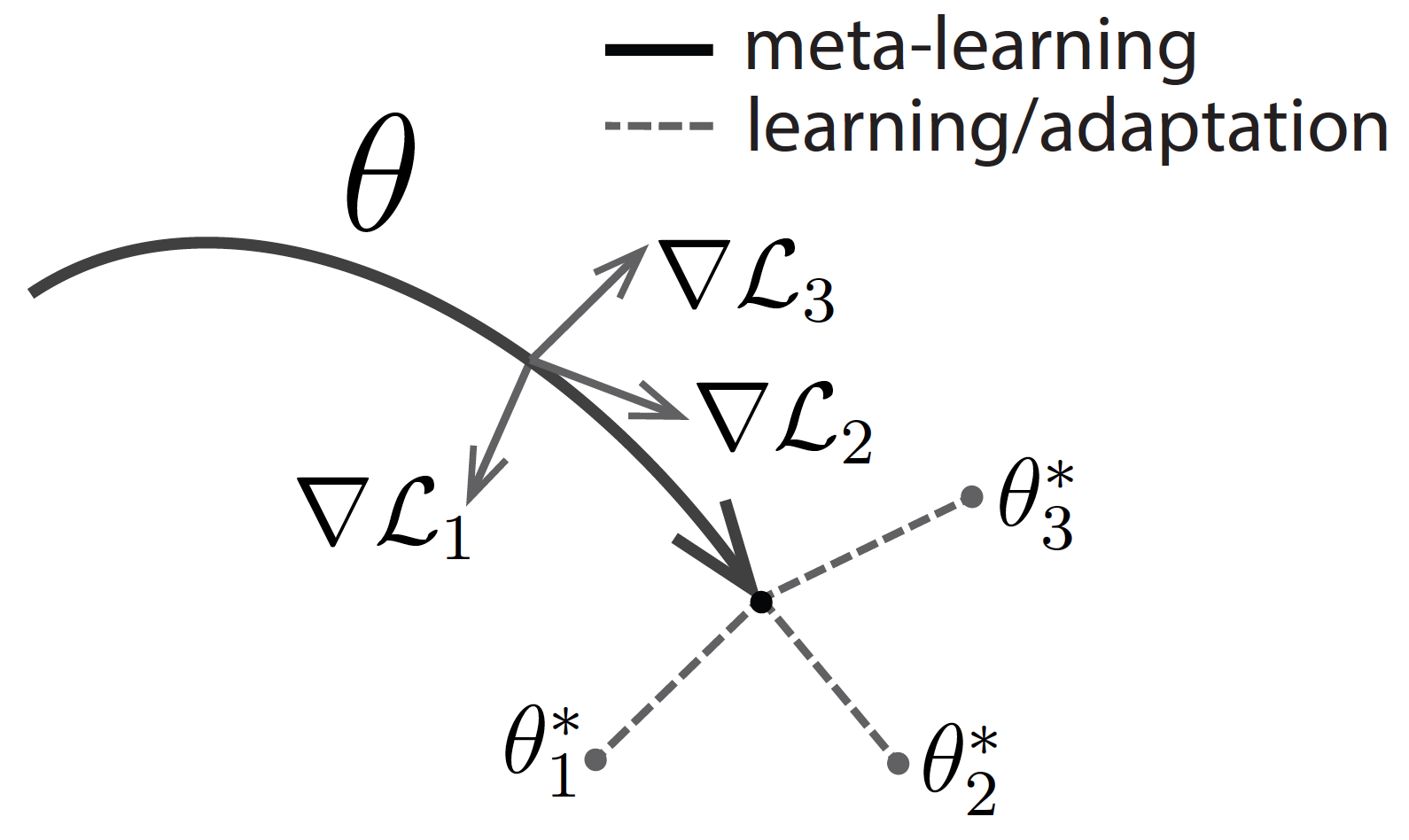

Meta-Learning Is All You Need

Meta-learning, also known as learning how to learn, has recently emerged as a promising learning paradigm that can take information learned from one set of tasks and generalize it proficiently to unseen tasks. During this quarantine time, I started watching the lectures of Stanford's CS 330 class on Deep Multi-Task and Meta Learning, taught by the brilliant Chelsea Finn. Drawing on her lectures, this blog post attempts to answer these key questions:

Why do we need meta-learning?

How does the math of meta-learning work?

What are the different approaches to designing a meta-learning algorithm?

Datacast Episode 32: Economics, Data For Good, and AI Research with Sara Hooker

Sara Hooker is a researcher at Google Brain doing deep learning research on reliable explanations of model predictions for black-box models. Her main research interests gravitate towards interpretability, model compression, and security. In 2014, she founded Delta Analytics, a non-profit dedicated to building the technical capacity that helps non-profits across the world use machine learning for good. She grew up in Africa, in Mozambique, Lesotho, Swaziland, South Africa, and Kenya. Her family now lives in Monrovia, Liberia.

Recommendation System Series Part 5: The 5 Variants of Multi-Layer Perceptron for Collaborative Filtering

Datacast Episode 31: From Quantum Computing to Epidemic Modeling with Colleen Farrelly

Colleen M. Farrelly is a data scientist whose experience spans biotech, healthcare, pharma, marketing, finance, operations, edtech, manufacturing, and disaster logistics. Her research focuses mostly on the intersection of topology/geometry, machine learning, statistics, and quantum computing. She’s passionate about poetry, surfing, Gators football, and socioeconomics.

Datacast Episode 30: Data Science Evangelism with Parul Pandey

Parul Pandey is a Data Science Evangelist at H2O.ai. She combines data science, evangelism, and community in her work, with an emphasis on breaking down data science jargon for a general audience. Prior to H2O.ai, she worked with Tata Power India, applying machine learning and analytics to solve the pressing problem of load shedding in India. She is also an active writer and speaker and has contributed to various national and international publications, including Towards Data Science, Analytics Vidhya, KDnuggets, and DataCamp.

Datacast Episode 29: From Bioinformatics to Natural Language Processing with Leonard Apeltsin

Dr. Leonard Apeltsin is a research fellow at the Berkeley Institute for Data Science. He holds a Ph.D. in Biomedical Informatics from UCSF and a BS in Biology and Computer Science from Carnegie Mellon University. Leonard was a Senior Data Scientist & Engineering Lead at Primer AI, a machine learning company that specializes in using advanced natural language processing techniques to analyze terabytes of unstructured text data. As a founding team member, Leonard helped expand the Primer AI team from four employees to over 80 people. Outside of data science and ML, Leonard enjoys scuba diving, salsa dancing, and making short documentary films.