Last month, I had the opportunity to speak at Arize:Observe, the first conference dedicated solely to ML observability from both a business and technical perspective. More than a mere user conference, Arize:Observe features presentations and panels from industry thought leaders and ML teams across sectors. Designed to tackle both the basics and the most challenging questions and use cases, the conference has sessions on performance monitoring and troubleshooting, data quality and drift monitoring and troubleshooting, ML observability in the world of unstructured data, explainability, business impact analysis, operationalizing ethical AI, and more.

In this blog recap, I will dissect content from the summit’s most insightful technical talks, covering a wide range of topics from scaling real-time ML and best practices of effective ML teams to challenges in monitoring production ML pipelines and redesigning ML platforms.

Note: You should also check out this article from the Arize team on the key takeaways from the fireside chats of the summit.

1 - Keynote

Observability is arguably the hottest area of machine learning today. The massive investments companies have put toward digital transformation and building data-centric businesses in the last decade are manifesting as machine learning models in production – yet there’s a gap in the infrastructure required to maintain and improve these models once they are deployed into the real world. In their keynote presentation, Jason Lopatecki and Aparna Dhinakaran explored the state of the ML infrastructure ecosystem, key considerations when building an ML observability practice that can deliver tangible ROI across your organization, and what’s on the horizon of Arize’s product roadmap.

Over the last five years, most of the ML world’s attention has gone to the stages before models make it into production (data preparation, model training, experiment tracking, and model deployment). Far less attention has gone to what happens once models hit production and impact the business. On average, teams spend less than 1% of their time on the post-deployment phase. As a result, they often don’t know when model issues crop up and only find out when their customers are impacted. It can then take weeks to troubleshoot and find the root cause. These business challenges share common underlying technical problems such as model/data drift, performance degradation, model interpretability, data quality issues, model readiness, and fairness/bias issues.

In other words, companies are shipping AI blind. There’s a journey that a model has to go through before it even makes it into production:

It starts with a Jupyter Notebook where the data scientist builds the model.

Then the model has to be transformed (maybe rewritten into Python or Java code) to be production-ready. During this process, teams likely encounter data changes, broken scripts, different features in production, and data leakage.

Once the model is in production, you have to deal with feature changes, performance drifts, cohort regression, etc. Teams aren’t well-equipped to troubleshoot these model issues; the best tools they had were manual troubleshooting scripts.

Here are some statistics from Arize’s survey of 1,000+ companies working in this space (conducted earlier this year):

84% take >1 week to detect and fix a model issue.

51% want deeper capabilities to monitor drift and conduct root cause analysis.

54% and 52% say business execs can’t quantify AI ROI and simply don’t understand ML, respectively.

There are two ways that teams have set out to tackle AI and ML in their businesses:

The first-generation citizen data scientist approach is to build a single platform that does everything. This low-code/no-code approach helps turn business analysts into data scientists and gives them the power to build models.

The second way is to build a specialized data science function that gives the business a competitive edge. This entails using modular software tools to fix and improve MLOps workflows based on business needs. As in software engineering, the ML engineering space consists of modular tools and solutions for different problems, such as processing and augmenting data for use by models, building models that serve a range of business goals, and integrating model predictions into the business.

To solve the last mile of handling model predictions and operational decisions, companies have been duct-taping various tools together to trace performance, explain model predictions, visualize the data, monitor and calculate metrics, write orchestration jobs, and store model inferences. ML observability combines all of these tools in a single place: software that helps teams automatically monitor AI, understand how to fix it when it’s broken, and improve data and models.

In infrastructure and systems, logs, metrics, and tracing are crucial to achieving observability. These components are also critical to achieving ML observability, which is the practice of obtaining a deep understanding of your model’s data and performance across its lifecycle:

Inference Store – Records of ML prediction events that were logged from the model. These raw prediction events hold granular information about the model’s predictions.

Model Metrics – Calculated metrics on the prediction events to determine overall model health over time, including drift, performance, and data quality metrics. These metrics can then be monitored.

ML Performance Tracing – While logs and metrics might be adequate for understanding individual events or aggregate metrics, they rarely provide helpful information when debugging model performance. You need another observability technique called ML performance tracing to troubleshoot model performance.
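To make these three components more concrete, here is a minimal Python sketch, assuming an in-memory list stands in for the inference store and that ground-truth labels have already arrived. The names (`PredictionEvent`, `InferenceStore`, `trace_by_feature`) are hypothetical and do not reflect Arize’s API; "tracing" is reduced here to breaking an aggregate metric down by one feature’s values to find the segment that drags performance down.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class PredictionEvent:
    """One raw prediction record, as it might be logged to an inference store."""
    prediction_id: str
    features: Dict[str, str]
    predicted_label: int
    actual_label: int  # assumption: ground truth has already been joined in


@dataclass
class InferenceStore:
    """Hypothetical in-memory stand-in for an inference store."""
    events: List[PredictionEvent] = field(default_factory=list)

    def log(self, event: PredictionEvent) -> None:
        self.events.append(event)


def accuracy(events: List[PredictionEvent]) -> float:
    """Aggregate model metric computed over raw prediction events."""
    if not events:
        return float("nan")
    correct = sum(e.predicted_label == e.actual_label for e in events)
    return correct / len(events)


def trace_by_feature(store: InferenceStore, feature: str) -> Dict[str, float]:
    """Break accuracy down by each value of one feature to locate a weak segment."""
    segments: Dict[str, List[PredictionEvent]] = defaultdict(list)
    for e in store.events:
        segments[e.features.get(feature, "<missing>")].append(e)
    return {value: accuracy(evts) for value, evts in segments.items()}


store = InferenceStore()
store.log(PredictionEvent("p1", {"region": "east"}, 1, 1))
store.log(PredictionEvent("p2", {"region": "east"}, 0, 0))
store.log(PredictionEvent("p3", {"region": "west"}, 1, 0))
store.log(PredictionEvent("p4", {"region": "west"}, 0, 0))
print(accuracy(store.events))             # 0.75
print(trace_by_feature(store, "region"))  # {'east': 1.0, 'west': 0.5}
```

The overall metric alone (0.75) says something is off; the per-segment breakdown points at the "west" cohort as the place to start digging.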

Before ML observability, ML teams only found out about ML issues when the business or the customers did. Data scientists and ML engineers would spend weeks writing queries and using ad-hoc tools to find the root cause of a performance issue, without any structured workflow. With ML observability, monitors on key metrics alert teams before a customer complains, and tracing-based troubleshooting workflows let them drill down to the root cause (where the issue comes from, which segment is pulling down the model, etc.). As a result, ML teams can fix the data or the model and connect with the inference store to kick off the ML workflow. They feel more confident putting models into production, have the tools to root-cause issues, and cause less disruption to the business when ML issues come up.

Arize has introduced a free self-serve version of their ML Observability platform, which enables users to find the root causes of issues and resolve model performance problems fast, regardless of the number of models deployed in production. With integration via an SDK or file ingestion from major cloud storage providers, ML teams can begin monitoring and troubleshooting model performance in minutes. Check out their blog post on ML Performance Tracing for more details!

2 - Using Reinforcement Learning Techniques for Recommender Systems

Ever wonder how you can train algorithms to introduce fresh and new information while still keeping recommendations targeted and personalized to the user? Claire Longo from Opendoor covered how and when to leverage reinforcement learning (RL) techniques for personalized recommendation problems in fields from e-commerce to click-through-rate optimization. These methods can solve common challenges encountered in recommender systems, such as the cold start problem and the echo chamber effect.

Recommendation systems are used to drive personalization through AI. They have wide-ranging applications in e-commerce, newsfeed personalization, music recommendations, CTR optimization in marketing/advertising, and more. Traditional ML personalization algorithms, including content-based filtering, collaborative filtering, and hybrid systems (factorization machines, Deep RecSys), depend on user and item data.

While traditional ML algorithms find patterns in historical data, RL algorithms learn by trial and error. RL is a set of live learning techniques that define an environment in which agents take actions to optimize an outcome. Central to RL is the explore/exploit tradeoff: the agent randomly explores new actions and their outcomes while still primarily following the optimal policy it has learned thus far.

Claire then framed personalization as an RL problem as seen above, where the agent is the decision-making mechanism, the action is an item recommendation, the reward is the thing we are trying to optimize with the recommendations (click, purchase, etc.), and the environment is the world defined by the user and item data.
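Here is a minimal sketch of that framing as an epsilon-greedy multi-armed bandit, one of the simplest ways to implement the explore/exploit tradeoff. It is an illustration, not the algorithm from the talk: it ignores user context (a contextual bandit would condition on user and item features), and the items, click probabilities, and `epsilon=0.1` are made-up assumptions.

```python
import random
from typing import Dict, List


class EpsilonGreedyRecommender:
    """Agent: chooses an item (action) and learns from the reward (e.g., a click)."""

    def __init__(self, items: List[str], epsilon: float = 0.1, seed: int = 0) -> None:
        self.items = items
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts: Dict[str, int] = {item: 0 for item in items}
        self.values: Dict[str, float] = {item: 0.0 for item in items}  # running mean reward

    def recommend(self) -> str:
        # Explore: with probability epsilon, show a uniformly random item.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.items)
        # Exploit: otherwise show the item with the highest estimated reward.
        return max(self.items, key=lambda item: self.values[item])

    def update(self, item: str, reward: float) -> None:
        # Incrementally update the running mean reward of the recommended item.
        self.counts[item] += 1
        self.values[item] += (reward - self.values[item]) / self.counts[item]


# Environment: hypothetical click probabilities per item, unknown to the agent.
click_prob = {"sneakers": 0.05, "headphones": 0.30, "backpack": 0.10}
env_rng = random.Random(42)
agent = EpsilonGreedyRecommender(list(click_prob), epsilon=0.1)
for _ in range(5000):
    item = agent.recommend()
    reward = 1.0 if env_rng.random() < click_prob[item] else 0.0
    agent.update(item, reward)

print(agent.counts)  # most recommendations should end up going to "headphones"
```

The same exploration step is what helps with the cold-start, echo chamber, and long-tail problems below: a fraction of traffic keeps trying items the agent knows little about.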

Some of the top RL algorithms to look at are contextual multi-armed bandits (the simplest RL algorithm that leverages both user and item data), value-based methods (which determine best actions by estimating the long-term value of the actions), policy-based methods (which optimize the value of a policy), and model-based methods (which use ML to model the environment).

Claire then brought up the three significant challenges associated with building a recommendation system:

The Cold-Start Problem: It occurs when new users enter the system. We are unable to make personalized recommendations because we don’t have any data. With an RL solution, we can use the explore/exploit trade-off to jump-start the algorithm. It will try a few options and adapt based on how the user responds.

The Echo Chamber Effect: It occurs when a personalization algorithm becomes almost too personalized. You are only being introduced to things that similar users like. This actually makes your world smaller, not bigger. With an RL solution, we can use the explore/exploit trade-off to give the user the opportunity to interact with new information they would not have otherwise discovered.

The Long-Tail Effect: There is more data for popular items, so personalization algorithms learn to recommend these items better. These are accurate recommendations, but it’s arguable that the user could’ve easily discovered these items on their own without the aid of a personalization algorithm. With an RL solution, we can use the explore/exploit trade-off to put new items in front of the user and see how they respond. If they respond well, the algorithm will adapt and learn to personalize even better for that user.

To evaluate and monitor recommendation systems, Claire recommended looking at three types of metrics:

Mean Average Precision@k and Mean Average Recall@k are ideal for evaluating an ordered list of recommendations. They measure precision and recall over the top k recommendations. Both metrics can look good even for models that only work well on popular items.

Coverage is the percentage of items that the algorithm is able to recommend. This metric is useful for live monitoring to detect behavior changes of users.

Personalization measures dissimilarity between users’ lists of recommendations. This metric is useful as a unit test in your CI/CD pipeline to detect patterns between user cohorts.
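For readers who want to see the arithmetic, here is a from-scratch sketch of MAP@k, coverage, and personalization. It is not the Recmetrics API; MAR@k is omitted, and personalization follows one common formulation (1 minus the mean pairwise cosine similarity of users’ binary recommendation vectors). The item IDs in the example are made up.

```python
from itertools import combinations
from math import sqrt
from typing import List, Sequence, Set


def average_precision_at_k(actual: Set[str], predicted: Sequence[str], k: int) -> float:
    """AP@k for one user: precision at each relevant position in the top k, averaged."""
    if not actual:
        return 0.0
    top_k = list(predicted[:k])
    hits, score = 0, 0.0
    for i, item in enumerate(top_k):
        if item in actual and item not in top_k[:i]:  # ignore duplicate recommendations
            hits += 1
            score += hits / (i + 1)
    return score / min(len(actual), k)


def map_at_k(actuals: List[Set[str]], predicteds: List[Sequence[str]], k: int) -> float:
    """Mean of AP@k over all users."""
    return sum(average_precision_at_k(a, p, k) for a, p in zip(actuals, predicteds)) / len(actuals)


def coverage(predicteds: List[Sequence[str]], catalog: Sequence[str]) -> float:
    """Percentage of catalog items that appear in at least one user's recommendations."""
    recommended = {item for recs in predicteds for item in recs}
    return 100.0 * len(recommended & set(catalog)) / len(catalog)


def personalization(predicteds: List[Sequence[str]]) -> float:
    """1 minus the mean pairwise cosine similarity of users' binary recommendation vectors."""
    if len(predicteds) < 2:
        raise ValueError("need at least two users")
    sims = []
    for a, b in combinations(predicteds, 2):
        sa, sb = set(a), set(b)
        sims.append(len(sa & sb) / sqrt(len(sa) * len(sb)) if sa and sb else 0.0)
    return 1.0 - sum(sims) / len(sims)


actuals = [{"a", "b"}, {"c"}]
predicteds = [["a", "x", "b"], ["y", "c", "z"]]
print(map_at_k(actuals, predicteds, k=3))     # about 0.667
print(coverage(predicteds, list("abcxyzvw")))  # 75.0
print(personalization(predicteds))             # 1.0 (the two lists share no items)
```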

To learn more about these topics, Claire recommended these resources: Mastering RL with Python, The Ethical Algorithm, DeepMind’s RL Lecture Series, and the Recmetrics repository (created by her).

3 - DoorDash’s ML Observability Journey

As one of the largest logistics companies in the U.S., DoorDash relies on its machine learning infrastructure to deploy models into production that ensure a consistent experience for customers. As such, applying observability best practices to its ML models at a system level is critical. Hien Luu and Nachiket Paranjape provided an overview of DoorDash’s ML platform. They covered why they invested in model observability, how they chose a model monitoring approach, and how they think about ML model monitoring as a DevOps system.

DoorDash’s mission is to grow and empower local economies. They accomplish that through a set of product offerings: Food Delivery and Pickup (customers can order food on demand), Convenience and Grocery (customers can order everything from non-food items to entire weeks of groceries), and DashPass (a subscription product similar to Amazon Prime).

DoorDash has various ML use cases across the food ordering process:

Creating order: When customers land on the DoorDash homepage, DoorDash wants to surface the most compelling options. This is where the recommendation models play a big role. If the customer doesn’t see anything they like on the homepage and decides to search, DoorDash leverages a set of search and ranking models to provide relevant search results.

Order checkout: Estimated Times of Arrival (ETAs) are essential for setting customer expectations about when the food will arrive; therefore, it is critical to have accurate ETAs (which are predicted based on factors such as the size of the shopping cart, the store’s historical operations, the number of Dashers available at that point in time, etc.). DoorDash also uses a series of fraud detection models to identify fraudulent transactions.

Dispatching order: DoorDash’s core logistics engine sends orders to merchants and assigns orders to dashers. In order to do this effectively and efficiently, it relies on several ML models, such as predicting food preparation time and travel time. Unlike Amazon orders, food orders emerge on the fly, and food quality plays an important role in the delivery.

Delivering order: ML use cases in this step include routing, image classification, and more. During the pandemic, DoorDash introduced contactless delivery, so they asked dashers to drop off the food near the front door, take a photo of the food, and then send the photo to customers and DoorDash. This helps customers find their food easily. DoorDash uses a series of deep learning models to identify whether the drop-off photo is valid or not.

Once DoorDash had integrated ML systems into its products, a need for monitoring arose since data scientists wanted to know what decisions were taken (and why). The ML platform team consulted with the data science team to understand what would be the most helpful for them. Based on the feedback, the ML platform team quickly implemented the first version with MLOps principles, which generated good results and led to iterative improvements.

Why observability? Once an ML model is deployed to production, it’s susceptible to degradation, which negatively impacts the accuracy of DoorDash’s time estimates. Model predictions tend to deviate from the expected distribution over time. Observability and monitoring let DoorDash protect the downside and avoid bad outcomes. For example, when they migrated to a new feature upload system, they experienced issues in their downstream model prediction services. Had they had monitoring at that time, they would have detected these shifts sooner and fixed their models. As they built out more mature systems, they realized they needed systematic performance monitoring, which also uncovered other issues, such as problems with feature uploading.

Because ML models are derived from data patterns, their inputs and outputs need to be closely monitored to diagnose and prevent model drift and feature drift. Monitoring and testing should be present every step of the way, but Nachiket focused on major areas, including data quality, model training, model prediction, and general system performance. Additionally, their users like to understand why a model made some specific predictions (ad-hoc inquiries).

As seen in the table above, the ML platform team has laid out a high-level view and categorized the areas into two dimensions: observability and explainability.

For data quality, they are investing in a data quality service which includes adding safeguards to data inputs before they reach the training phase.

For training, prediction, and system areas, they have developed in-house tools and used industry standards such as Shapley plots.

For ad-hoc inquiries, they provide dashboards, notebooks, and a one-stop-shop ML Portal UI (where data scientists can have an overview of their ML applications).

In this presentation, Nachiket covered model training, model prediction, and system performance in detail. In order to make ML observability setup easier for implementation and adoption, the ML platform team has leveraged existing tools and technologies used at DoorDash: data ingestion pipelines with Snowflake, compute with Databricks, issue notification with Slack and PagerDuty, etc.

For model training:

They want to ensure that the inputs fed into the model are as expected. They verify that there are no excessive amounts of missing values and that the feature distributions are as expected. They also track their training runs with MLflow to gain observability.

While setting up training instances, data scientists look at various properties of features (distributions, percentage of negative and missing values), as seen above. They use internal build tools to generate these data quality reports for the training instances.
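A pre-training check along these lines might look like the following sketch. DoorDash’s internal build tools were not shown, so the function name, thresholds (`max_missing_frac=0.05`), and the example feature `prep_time_min` are all hypothetical.

```python
import math
from statistics import mean
from typing import List, Optional, Sequence, Tuple


def is_missing(value: Optional[float]) -> bool:
    return value is None or (isinstance(value, float) and math.isnan(value))


def validate_feature(
    name: str,
    values: Sequence[Optional[float]],
    max_missing_frac: float = 0.05,
    allow_negative: bool = True,
    expected_mean_range: Optional[Tuple[float, float]] = None,
) -> List[str]:
    """Return human-readable issues for one training feature (an empty list means it passed)."""
    if not values:
        return [f"{name}: no values"]
    present = [v for v in values if not is_missing(v)]
    issues = []
    # Check 1: share of missing values (None or NaN).
    missing_frac = 1 - len(present) / len(values)
    if missing_frac > max_missing_frac:
        issues.append(f"{name}: {missing_frac:.0%} missing (limit {max_missing_frac:.0%})")
    # Check 2: negative values where the feature should be non-negative.
    if not allow_negative and any(v < 0 for v in present):
        issues.append(f"{name}: contains negative values")
    # Check 3: a crude distribution check on the mean.
    if expected_mean_range is not None and present:
        lo, hi = expected_mean_range
        m = mean(present)
        if not lo <= m <= hi:
            issues.append(f"{name}: mean {m:.2f} outside expected range [{lo}, {hi}]")
    return issues


prep_times = [10.0, 12.0, None, 11.0]  # hypothetical food-prep-time feature, in minutes
print(validate_feature("prep_time_min", prep_times, allow_negative=False,
                       expected_mean_range=(5, 30)))
# ['prep_time_min: 25% missing (limit 5%)']
```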

Another standard way of looking at the effect of features on the output is a game-theoretic approach called Shapley values, which is accessible through their ML Portal UI.

Recently, they have enabled the data scientists to view feature importance plots for tree-based models (also exposed via the ML Portal UI).

For model prediction:

In their production environments, they log every prediction value to a Snowflake table. Having all of the predictions logged in a structured way allows them to query these tables to derive statistics (averages, percentiles, etc.).

They can also set up compute jobs that extract data from these Snowflake tables and compute these statistics at a regular cadence. This has allowed them to look at the historical performance of their features and prediction scores.

Once a prediction is logged, they also want to monitor the outputs of these models (descriptive statistics, the model’s AUC, etc.). These predicted values are monitored over time to ensure that there are no unexpected outliers.
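The value of structured prediction logs is that summary statistics become a query away. The sketch below uses Python’s built-in `sqlite3` purely as a stand-in for Snowflake; the `predictions` table, its columns, and the `eta_model` rows are hypothetical. Averages and counts come from SQL, while percentiles are computed client-side with `statistics.quantiles`.

```python
import sqlite3
from statistics import quantiles
from typing import Dict

# sqlite3 stands in for Snowflake here; table and column names are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE predictions (model_id TEXT, day TEXT, score REAL)")
conn.executemany(
    "INSERT INTO predictions VALUES (?, ?, ?)",
    [("eta_model", "2022-05-01", s) for s in (10.0, 20.0, 30.0, 40.0)],
)


def daily_prediction_stats(conn: sqlite3.Connection, model_id: str, day: str) -> Dict[str, float]:
    """Summary statistics for one model's logged predictions on one day."""
    count, avg = conn.execute(
        "SELECT COUNT(*), AVG(score) FROM predictions WHERE model_id = ? AND day = ?",
        (model_id, day),
    ).fetchone()
    scores = [row[0] for row in conn.execute(
        "SELECT score FROM predictions WHERE model_id = ? AND day = ?", (model_id, day))]
    # Percentiles computed client-side; quantiles() needs at least two data points.
    cuts = quantiles(scores, n=100, method="inclusive")
    return {"count": count, "avg": avg, "p50": cuts[49], "p95": cuts[94]}


print(daily_prediction_stats(conn, "eta_model", "2022-05-01"))
# {'count': 4, 'avg': 25.0, 'p50': 25.0, 'p95': 38.5}
```

A scheduled job that runs such a function per model and day, and writes the results to a metrics backend, is one way to get the historical view described above.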

For feature quality: They launched Fabricator, a declarative framework for creating features, in Q4 2021, which has enabled their users to develop new feature pipelines at a breakneck pace. Features naturally degrade over time, so they realized they needed to monitor feature drift. Feature storage can also get expensive, and monitoring feature drift is often important for avoiding unnecessary feature storage costs.
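The talk did not specify which drift metric DoorDash uses. As one common choice, here is a sketch of the Population Stability Index (PSI), which compares the binned distribution of a feature in a current window against a baseline. The bin edges, the `eps` floor for empty bins, and the 0.25 "significant shift" threshold are assumptions (the last is a widely used rule of thumb, not a DoorDash number).

```python
import math
from bisect import bisect_right
from typing import List, Sequence


def bin_fractions(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Fraction of values falling into each bin defined by sorted cut points `edges`."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def population_stability_index(baseline: Sequence[float], current: Sequence[float],
                               edges: Sequence[float], eps: float = 1e-6) -> float:
    """PSI = sum over bins of (actual - expected) * ln(actual / expected)."""
    expected = bin_fractions(baseline, edges)
    actual = bin_fractions(current, edges)
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # floor empty bins to avoid log(0)
        psi += (a - e) * math.log(a / e)
    return psi


baseline = [1, 2, 3, 4, 5, 6, 7, 8]      # e.g., last month's values of a feature
current = [6, 7, 8, 9, 10, 11, 12, 13]   # this week's values, shifted upward
edges = [2.5, 5.5]
print(population_stability_index(baseline, baseline, edges))        # 0.0
print(population_stability_index(baseline, current, edges) > 0.25)  # True
```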

Initially, the ML Platform team set up Flink-based real-time jobs to track the prediction events, which go to Snowflake tables. This was not only cost-inefficient but also difficult to scale. After an iterative process of improving this setup and talking to data scientists, they decided to take a two-step approach for setting up monitoring on the feature quality.

They noticed that defaulting features (features falling back to default values) had the most adverse impact on their models, so feature defaulting had to trigger alerts almost immediately. By contrast, statistics such as the mean, standard deviation, and percentiles can be monitored and reported at a lower cadence.

Hence, they developed a two-tier approach: high-tier, or critical, alerts (which monitor defaulting features) and low-tier, or info, alerts (which monitor other statistics). For low-tier alerts, data scientists can set up monitoring for individual features.

They publish all the metrics to Chronosphere. By setting thresholds, users can get notified directly of any misbehaving features.
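The two-tier split can be sketched as two kinds of checks that emit alerts with different severities. This is an illustration only: the `Tier`/`Alert` types, thresholds, and the feature name `store_avg_prep_time` are hypothetical, and in practice the resulting metrics would go to a backend such as Chronosphere rather than being printed.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional, Sequence


class Tier(Enum):
    CRITICAL = "critical"  # checked on every batch; e.g., page the on-call
    INFO = "info"          # checked at a lower cadence; e.g., post to a channel


@dataclass
class Alert:
    tier: Tier
    feature: str
    message: str


def check_defaulting(feature: str, values: Sequence[float], default_value: float,
                     max_default_rate: float = 0.1) -> Optional[Alert]:
    """High-tier check: too many values equal the feature's fallback default."""
    rate = sum(v == default_value for v in values) / len(values)
    if rate > max_default_rate:
        return Alert(Tier.CRITICAL, feature, f"{rate:.0%} of values defaulted to {default_value!r}")
    return None


def check_mean(feature: str, values: Sequence[float], lower: float, upper: float) -> Optional[Alert]:
    """Low-tier check: the feature's mean moved outside a user-configured band."""
    m = sum(values) / len(values)
    if not lower <= m <= upper:
        return Alert(Tier.INFO, feature, f"mean {m:.2f} outside [{lower}, {upper}]")
    return None


values = [0.0, 0.0, 0.0, 5.0]  # hypothetical batch of one feature's values
alerts: List[Alert] = [a for a in (
    check_defaulting("store_avg_prep_time", values, default_value=0.0),
    check_mean("store_avg_prep_time", values, lower=2.0, upper=10.0),
) if a is not None]
for alert in alerts:
    print(alert.tier.value, alert.message)
# critical 75% of values defaulted to 0.0
# info mean 1.25 outside [2.0, 10.0]
```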

For system performance:

As they went ahead in their journey of setting up model observability, a lot of data that was to be monitored came from systems and services on the ML platform. Hence, it was equally important to monitor the health of these systems to ensure the reliability of the ML observability setup.

They follow standard service reliability best practices and monitor requests, prediction counts, SLA availability, and latency. They have tight alerting on each of these components. Since other systems (such as delivery, ETA, or fraud) rely on their prediction values, changes in quality can significantly impact these sister teams.

Here are the four takeaways that DoorDash’s ML Platform team observed as benefits of developing their own production monitoring systems:

Leveraging existing tools: They could design a configurable and flexible platform for displaying metrics and setting up alerts by reusing these tools. This has helped them with the development and adoption of ML observability at DoorDash.

No onboarding required: Data scientists don’t need to individually write code to add monitoring to their training pipelines. At most, they need to add configuration files in some cases; otherwise, nothing is required. They also do not have to think about the scalability and reliability of the monitoring solution.

Easy visualization: Graphing tools such as Chronosphere offer the ability to interactively view and split historical data. This is incredibly useful when it comes to finding correlations between events.

Self-serve: Data scientists can use this tool without the help of the platform team, which enables the platform team to have a more scalable solution for detecting model and feature drift going forward.

The talk ended with these four lessons learned:

Scaling out doesn’t always work: Sometimes, you have to think outside the box or go back to the drawing board. Scaling comes with its own costs.

Opt-in vs. Opt-out: Both can work, depending on the use case. For example, all models in production have model monitoring metrics enabled by default, whereas feature monitoring currently uses an opt-in approach. Providing an out-of-the-box solution is preferable in most cases, as automation is key for any large-scale system. If you have good foundational tools, you should use them to enable an opt-in approach.

Leveraging existing tools can be beneficial.

Working closely with customers to understand their needs is necessary for a good outcome: Having a constant feedback loop with the data scientists has helped the platform team identify several edge cases and build a data scientist-friendly ML observability solution at DoorDash.

4 - Scaling Real-Time ML at Chime

Chime is a financial technology company founded on the premise that basic banking services should be helpful, easy, and free. Behind that promise is an ML team that regularly deploys sophisticated models in production, including some that recently transitioned from batch to real-time data. Peeyush Agarwal and Akshay Jain – Chime’s ML Platform Team Leads – walked through a real-world example, showcasing what it took from a technical perspective to go real-time across the entire ML lifecycle.

Over 75% of Americans rely on their next paycheck to make ends meet. Chime’s mission is to help its members achieve financial peace of mind. In addition to a checking account and direct deposit up to two days early, here are a few of Chime’s other products:

Spot Me: Chime spots members up to $200 on debit card purchases and cash withdrawals with no overdraft fee.

Credit Builder is a secured credit card without annual fees or interest that helps members build a credit history.

Pay Friends allows members to pay and receive money from friends instantly.

High-Yield Savings helps members save and grow their money.

The mission of the Data Science and Machine Learning team at Chime is to deliver machine intelligence across all of Chime’s systems and products by eliminating engineering bottlenecks to individualize member experiences and better inform Chime’s agent operations. Chime has a lot of ML use cases: personalizing member experience, preventing fraudulent transactions / unauthorized logins / other risk-related events, and predicting member lifetime value/matching members to appropriate offers.

The table above shows how Chime approaches ML use cases:

Training and Batch Inference: The pipeline operates on batches of historical events (such as identifying each member’s lifetime value weekly or predicting when a member might stop using features). You need this approach when all the data needed to run the pipeline is available in the warehouse (it can tolerate minutes of latency) and in-the-moment information has limited use.

Real-Time Inference: The pipeline runs low-latency inference (<1s) on events in the moment (such as authorizing debit card purchases). You need this approach when someone or something is blocked on the prediction and in-the-moment information is vital (inference results cannot be pre-computed).

Near-Line Inference is a special and rare case between batch and real-time. This is required when you need to trigger inference on an event happening and don’t need action to happen right away (no one is blocked). This approach is rare and can almost always be framed as batch or real-time.
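As a compact restatement of these rules of thumb, the toy function below maps three yes/no questions to an inference mode. The function and its parameter names are hypothetical; the talk presented the criteria as a table, not as code.

```python
from enum import Enum


class InferenceMode(Enum):
    BATCH = "batch"
    REAL_TIME = "real-time"
    NEAR_LINE = "near-line"


def choose_inference_mode(blocked_on_prediction: bool,
                          needs_in_the_moment_data: bool,
                          triggered_by_event: bool) -> InferenceMode:
    """Toy encoding of the batch / real-time / near-line rules of thumb."""
    # Someone is waiting AND the answer can't be pre-computed -> real-time.
    if blocked_on_prediction and needs_in_the_moment_data:
        return InferenceMode.REAL_TIME
    # An event should trigger fresh inference, but nobody is waiting -> near-line.
    if triggered_by_event and needs_in_the_moment_data:
        return InferenceMode.NEAR_LINE
    # Everything else can run on warehouse data on a schedule.
    return InferenceMode.BATCH


# Debit card authorization: caller blocked, fresh data vital.
print(choose_inference_mode(True, True, True).value)     # real-time
# Weekly member lifetime value: warehouse data suffices.
print(choose_inference_mode(False, False, False).value)  # batch
```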

The Chime DS/ML team requires real-time models for use cases in which they need to take immediate action based on inference results (e.g., transaction authorization, peer-to-peer payment authorization, two-factor authentication for app login, chatbot, etc.). In these cases, inference results can’t be pre-computed in a batch pipeline.

Chime’s first real-time deployed model was used to prevent fraud on Pay Friends. The goal of the model is to prevent bad actors from transferring money and successfully executing social engineering or account take-over. For context, Chime members can instantly send money to each other using Pay Friends.

The ML Platform team architected the system around an HTTP API. The ML model was deployed as an inference service and integrated with Chime’s internal engineering system. That system would send payer and transaction information to the inference service, which would look up a pre-computed set of features within milliseconds, make predictions, and send the predictions back as a response to the cache service.

Along with making and surfacing the predictions to the engineering system, they also tracked these predictions asynchronously through a data stream, where they tracked not only the predictions but also the metadata associated with the predictions (including the features used and any other metadata information that the infrastructure pipeline used to come up with that prediction).

A key component of the real-time infrastructure above is an online feature store. Before this first real-time model, all of Chime’s ML pipelines ran batch inference, using the Snowflake data warehouse as the single source of truth. To support real-time models, the platform team needed real-time feature lookups: hundreds of feature lookups within a double-digit-millisecond latency requirement. The feature store was also built to reduce drift between training and inference pipelines and to provide a consistent feature engineering framework.
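The sketch below illustrates the online-lookup pattern: pre-computed values are materialized per entity, and the serving path fetches all of a model’s features in one call and times it against a latency budget. Everything here is hypothetical, including the class, the feature names, and the 50 ms budget; a production online store would sit on a low-latency key-value database rather than a Python dict.

```python
import time
from typing import Dict, List, Mapping, Tuple


class InMemoryOnlineFeatureStore:
    """Hypothetical stand-in for an online feature store."""

    def __init__(self) -> None:
        self._rows: Dict[str, Dict[str, float]] = {}

    def materialize(self, entity_id: str, features: Mapping[str, float]) -> None:
        # A batch job pushes pre-computed feature values for one entity (e.g., a member).
        self._rows.setdefault(entity_id, {}).update(features)

    def get_features(self, entity_id: str, names: List[str],
                     default: float = 0.0) -> Dict[str, float]:
        # One lookup returns every requested feature; unknown ones fall back to `default`.
        row = self._rows.get(entity_id, {})
        return {name: row.get(name, default) for name in names}


LATENCY_BUDGET_MS = 50.0  # hypothetical double-digit-millisecond budget


def timed_lookup(store: InMemoryOnlineFeatureStore, entity_id: str,
                 names: List[str]) -> Tuple[Dict[str, float], float]:
    start = time.perf_counter()
    features = store.get_features(entity_id, names)
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    return features, elapsed_ms


store = InMemoryOnlineFeatureStore()
store.materialize("member_42", {"transfers_7d": 12.0, "avg_transfer_amount_30d": 37.5})
features, elapsed_ms = timed_lookup(
    store, "member_42", ["transfers_7d", "avg_transfer_amount_30d", "account_age_days"])
print(features)  # {'transfers_7d': 12.0, 'avg_transfer_amount_30d': 37.5, 'account_age_days': 0.0}
print(elapsed_ms < LATENCY_BUDGET_MS)
```

Because training jobs and the serving path read the same feature definitions, this pattern is also what helps keep training and inference consistent.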

Once they launched this model, they wanted to be able to monitor and audit it to enable transparency, the ability to question, and ease of understanding. On top of that, they wanted to create rules and policies, which might be a regulatory requirement in certain situations. A key component within Chime’s monitoring stack is instrumentation. Instrumentation aims to break down the inference pipeline step-by-step and facilitate performance optimizations. This helped them: (1) optimize feature store call time by keeping the caches warm and (2) reduce inference time for tree-based models by optimizing the inference stack.
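Step-by-step instrumentation can be as simple as timing each stage of a request. The sketch below is illustrative: `StepTimer` and the step names are hypothetical, and the feature lookup and model are toy stand-ins. In a real service the recorded timings would be emitted to a metrics backend so that slow steps (such as a cold feature-store cache) stand out.

```python
import time
from contextlib import contextmanager
from typing import Dict, Iterator


class StepTimer:
    """Records wall-clock time per named step of one inference request."""

    def __init__(self) -> None:
        self.timings_ms: Dict[str, float] = {}

    @contextmanager
    def step(self, name: str) -> Iterator[None]:
        start = time.perf_counter()
        try:
            yield
        finally:
            # Recorded even if the step raises, so failures still show up in timings.
            self.timings_ms[name] = (time.perf_counter() - start) * 1000.0


def handle_request(payload: Dict[str, float], timer: StepTimer) -> Dict[str, float]:
    with timer.step("feature_lookup"):
        features = {"amount": payload["amount"]}  # stand-in for a feature store call
    with timer.step("model_inference"):
        score = 0.9 if features["amount"] > 500 else 0.1  # stand-in for a trained model
    with timer.step("serialize_response"):
        response = {"score": score}
    return response


timer = StepTimer()
print(handle_request({"amount": 1000.0}, timer))  # {'score': 0.9}
print(sorted(timer.timings_ms))  # ['feature_lookup', 'model_inference', 'serialize_response']
```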

The platform team monitors its ML stack in four ways:

System metrics: They track anything related to the system, including how they run the model inference service as a part of their Kubernetes cluster. System metrics help them determine the memory and CPU usage and the health of individual components.

Operational metrics: These metrics include web service latency/uptime and those from AWS (like load balancer).

Custom ML platform metrics: These metrics are provided out-of-the-box to all models (error and success rates, the total time taken to make predictions, etc.).

Feature and model metrics: These metrics include those defined by the data scientists (model and feature quality metrics, offline model metrics), feature store operations, and inference logging.

After building the first real-time model, they started to think about replicating it across different use cases. To scale real-time ML at Chime, they focused heavily on monitoring and alerting:

They made sure they were not manually creating dashboards and metrics per model in the UI. They wanted their observability stack to be resilient and replicable as new models are added.

They applied anomaly detection to monitoring metrics so that alerting could scale across the different components of their stack.

They also standardized monitoring and alerting across ML models using infrastructure-as-code (the observability stack is part of the code itself). This approach gives data scientists metrics and dashboards out of the box.

They used a couple of integrations for their monitoring stack, including Arize, Datadog, Terraform, AWS, and PagerDuty.
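Chime’s stack used Terraform with tools like Datadog; the sketch below only illustrates the underlying idea of monitoring as code: one template, stamped out per model, with per-model overrides, producing a reviewable artifact instead of hand-built dashboards. The template fields, metric names, and thresholds are hypothetical and do not correspond to any real provider’s schema.

```python
import json
from typing import Dict, List, Optional

# Hypothetical standard monitor template; metric names, fields, and thresholds are illustrative.
STANDARD_MONITORS = [
    {"metric": "prediction.latency_ms.p99", "comparator": ">", "threshold": 100, "severity": "page"},
    {"metric": "prediction.error_rate", "comparator": ">", "threshold": 0.01, "severity": "page"},
    {"metric": "feature.default_rate", "comparator": ">", "threshold": 0.05, "severity": "info"},
]


def monitors_for_model(model_name: str,
                       overrides: Optional[Dict[str, float]] = None) -> List[Dict]:
    """Stamp out the standard monitors for one model, applying per-metric threshold overrides."""
    overrides = overrides or {}
    monitors = []
    for template in STANDARD_MONITORS:
        monitor = dict(template)  # shallow copy is enough: template values are scalars
        monitor["name"] = f"{model_name}:{template['metric']}"
        monitor["tags"] = {"model": model_name}
        monitor["threshold"] = overrides.get(template["metric"], template["threshold"])
        monitors.append(monitor)
    return monitors


def render_monitors(models: Dict[str, Dict[str, float]]) -> str:
    """Render every model's monitors as JSON that a provisioning step could consume."""
    all_monitors = [m for name in sorted(models) for m in monitors_for_model(name, models[name])]
    return json.dumps(all_monitors, indent=2, sort_keys=True)


# Adding a model is a one-line change in code rather than clicks in a UI.
models = {"pay_friends_fraud": {"prediction.latency_ms.p99": 50}, "member_ltv_weekly": {}}
print(render_monitors(models))
```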

The first time the platform team built and integrated real-time models into production, they recognized that a lot of one-off work was needed: they had to understand the data scientists’ code, containerize it, set up the serving stack, and implement CI/CD, monitoring, logging, etc. These activities are time-consuming and don’t scale across the hundreds of use cases at Chime.

Thus, they built a Python package that provides a standardized way of building model containers and pipelines. Both the DS/ML team and the ML platform team contribute to this package, which is then shipped to their package repository and made available for integration with all models. As seen in the diagram above, the DS/ML team works in their model repo and writes the code for their data preparation and model development. Then, they import the Build Tools package to bring in all of the common functionality that the ML platform team wants to provide for the model. The code is automatically built into a Docker image that is pushed to the AWS ECR registry.

With this build package, they can share code that’s common across models. The codebase is now much more modular and structured, enabling data scientists to build production-ready pipelines with automatic logging, monitoring, and error handling. Overall, it enables the ML platform team to manage tens to hundreds of models in a scalable way.
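The Build Tools package itself was not shown. One plausible shape for this kind of shared package is a base class that owns the cross-cutting concerns (timing, success/error counts, logging), so that a data scientist only implements `predict`. The sketch below assumes that design; the class names, logger name, and the toy fraud rule are all hypothetical.

```python
import logging
import time
from abc import ABC, abstractmethod
from typing import Any, Dict

logger = logging.getLogger("build_tools")  # hypothetical package name


class BaseModelPipeline(ABC):
    """Shared behavior a platform-owned package could provide to every model."""

    def __init__(self, model_name: str) -> None:
        self.model_name = model_name
        self.metrics: Dict[str, int] = {"success": 0, "error": 0}

    @abstractmethod
    def predict(self, payload: Dict[str, Any]) -> Dict[str, Any]:
        """Model-specific logic, written by the data scientist."""

    def __call__(self, payload: Dict[str, Any]) -> Dict[str, Any]:
        # Common wrapper: timing, success/error counts, and structured logging.
        start = time.perf_counter()
        try:
            result = self.predict(payload)
        except Exception:
            self.metrics["error"] += 1
            logger.exception("prediction failed for model=%s", self.model_name)
            raise
        self.metrics["success"] += 1
        elapsed_ms = (time.perf_counter() - start) * 1000.0
        logger.info("model=%s latency_ms=%.2f", self.model_name, elapsed_ms)
        return result


class PayFriendsFraudModel(BaseModelPipeline):
    """Hypothetical data-scientist code: only `predict` has to be written."""

    def predict(self, payload: Dict[str, Any]) -> Dict[str, Any]:
        # Toy threshold rule standing in for a trained model.
        return {"fraud_score": 0.95 if payload["amount"] > 1000 else 0.05}


model = PayFriendsFraudModel("pay_friends_fraud")
print(model({"amount": 2500}))  # {'fraud_score': 0.95}
print(model.metrics)            # {'success': 1, 'error': 0}
```

Keeping the wrapper in one shared package means a fix or new metric lands in every model the next time it is rebuilt, rather than being copied into each repo.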

After scaling their development environment, they then scaled their infrastructure orchestration. As they were working on deploying the training and inference pipelines, they needed to collaborate closely with data scientists to understand the codebase, containerize it, and run it on AWS (while still writing the infrastructure code for it in Terraform).

As seen below, they built a framework for orchestration - where data scientists can check in the logic they want to run. As soon as their code is merged into the main branch, the infrastructure automatically gets orchestrated in different AWS environments (development, staging, production, etc.). This tooling for orchestrating models provides templates to easily define pipelines for training, batch inference, etc., CI/CD to orchestrate pipelines in development and production environments, and the ability to run pipelines on a regular schedule. Overall, this update enables data scientists to self-serve and get the ML platform team out of their development loop.

The talk ended with these five lessons learned:

Invest in building or adopting a feature store: A feature store is important for retrieving all the feature values with double-digit millisecond latency so that inference can run in real time.

Build a robust framework for instrumentation and load testing: This enables monitoring data pipelines and metrics that help detect issues early in the process. Having it from the get-go is very helpful.

Build monitoring and auditability into the design (instead of as an afterthought): They structure the codebase and integrations so that the models have monitoring and auditability built in.

Build pluggable components and open interfaces: This has enabled them to build the platform incrementally and support diverse use cases (starting with batch training/inference and extending to real-time endpoints and further).

Adopt infrastructure-as-code (especially if/when managing infrastructure at a large scale): Doing things manually in the UI does not scale.

5 - Best Practices of Effective ML Teams

What does it take to get the best model into production? We've seen industry-leading ML teams follow some common workflows for dataset management, experimentation, and model management. Carey Phelps from Weights and Biases shared case studies from customers across industries, outlined best practices, and dove into tools and solutions for common pain points. Her main argument is that getting a model into production is a solved problem. Coordinating efforts on ML teams is not.

There are tools for every stage of software development, and it’s pretty clear what that workflow looks like. But for ML development, it’s not as clear since there aren’t standards that we all agree on for the ML workflow:

There’s the data curation phase: collecting, labeling, exploring, cleaning, preparing, and transforming the data. Results from that process include the raw dataset, the training and evaluation datasets, and the pre-processing code.

Next is the modeling phase: training, experimenting, evaluating, and optimizing a new model. A host of artifacts and metadata might be useful to track during that process (model checkpoints, training metrics, engineered features, terminal logs, environment, training code, system metrics, evaluation results, package versions, hyper-parameter sweeps, etc.).

The last phase is deployment: packaging, productionizing, serving, and monitoring the model. This phase has its own metadata and artifacts that would be useful to track, like model predictions, performance metrics, alerts, etc.

This whole process is incredibly cyclical. Once a model is developed and deployed into production, you monitor its performance, identify any issues/regressions, and go back through the flow: capturing new data, pre-processing the data differently, or finding a new model architecture or better hyper-parameters, constantly iterating on that process.

So what are the common pain points and frustrations for ML teams working on models day-to-day? Carey reasoned that untracked processes are brittle, but our industry hasn’t yet accepted that this is true for ML models (it’s not obvious to people that they should be version-controlling their models). Based on case studies from OpenAI, Blue River, and Toyota Research Institute, the challenges that the Weights and Biases team commonly sees fall into three categories:

Knowledge management: It’s really difficult to track and compare models without an automated system doing it. If you can’t recreate every pipeline step to reproduce a given model, it’s impossible to reliably say how it was created. Teams were frustrated with poor tracking and lineage and with how slowly they got results. As you develop solutions, keep in mind how crucial it is to make reporting live and interactive.

Standardization: There are a lot of ad-hoc workflows across the ML teams. That’s natural in the context of hacking a new model in a Jupyter Notebook, but for something that’s destined for production, workflows need to be rock solid. Ad-hoc workflows also naturally lead to siloed results because people don’t have a standardized system or a shared language to talk about the results they are getting. This also limits visibility into the process of running the ML team: someone like an executive or a team leader would have a hard time understanding how the ML team is operating because there’s no visibility into their process.

Tech Barriers: ML teams are frustrated because internal tools are expensive to maintain, data is hard to get out of vendor tools that lock it in, and off-the-shelf tools with high setup barriers are difficult to deploy.

Carey identified three categories of solutions for these challenges:

Reproducibility: A promising tool needs to capture data lineage and provenance, as well as the whole ML pipeline that leads up to a trained model, providing a reliable, automatic system of record.

Visualization: Dashboards need to be fast and interactive. Collaboration needs to happen in a unified workspace to break down workflow silos. Teams need visibility into how ML projects are going.

Fast Integration: The tool needs to be easy to set up with no vendor lock-in and easy exporting. It has to be fast to deploy and intuitive to use.
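As an illustration of the reproducibility requirement, the sketch below derives a deterministic fingerprint from the data, pre-processing code, and configuration behind a model, so two runs with identical inputs get the same ID. This is a generic hashing approach assumed for illustration, not how W&B implements lineage; `fingerprint` is a hypothetical name.

```python
import hashlib
import json


def fingerprint(data_rows, preprocessing_code, config):
    """Hypothetical helper: a deterministic ID for the exact data, code, and settings behind a model."""
    # sort_keys normalizes dict key order (including inside rows), so equal inputs hash equally.
    payload = json.dumps(
        {"data": data_rows, "code": preprocessing_code, "config": config},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


rows = [{"x": 1, "y": 0}, {"x": 2, "y": 1}]
code = "def clean(rows): return [r for r in rows if r['x'] is not None]"
run_a = fingerprint(rows, code, {"lr": 0.01, "epochs": 5})
run_b = fingerprint(rows, code, {"epochs": 5, "lr": 0.01})  # same settings, different key order
run_c = fingerprint(rows, code, {"lr": 0.02, "epochs": 5})  # one hyper-parameter changed
```

Storing this ID next to each trained model gives a cheap way to tell whether two models really came from the same inputs.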

Ultimately, Weights and Biases (W&B) is building towards an ML system of record (similar to Salesforce for sales or Jira for engineering).

Install the lightweight library: W&B keeps the integration lightweight and straightforward; you only add a couple of lines of code. W&B is infrastructure-agnostic, so you can train on your laptop, on a private cluster, or on cloud machines, as long as you have access to the W&B server, and visualize the training results.

Track metrics and artifacts: W&B gives users a simple central place to visualize the progress of model training. It captures system metrics out of the box, the exact code versions, and any uncommitted changes. It tracks artifacts like datasets and model versions, enabling users to iterate quickly with its interactive dashboards.

Compare experiments fast: W&B lets you compare runs and visualize relationships between hyper-parameters and metrics (all saved in the same standard persistent system of record). It’s easy to query and filter to find the best model or a baseline to compare your new model against.

Optimize hyper-parameters easily: W&B has a tool to scale up training by launching dozens of jobs in parallel that report back to a single central dashboard. That’s critical for letting the team move quickly.

Collaborate on reproducible models: Reports allow people to annotate their work and take notes on their progress. They then have a live dashboard to describe what they are working on and show charts that will update in real-time. It’s possible to comment directly on reports with charts, giving team members access to results they might not have seen otherwise.
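The track, compare, and sweep workflow above can be approximated in a few dozen lines of plain Python. This is a hypothetical in-memory stand-in, not the W&B API. `Tracker`, `Run`, and `sweep` are illustrative names, `train_and_evaluate` returns a made-up score instead of training anything, and threads stand in for jobs on a cluster.

```python
import itertools
import uuid
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field


@dataclass
class Run:
    config: dict
    metrics: dict = field(default_factory=dict)
    run_id: str = field(default_factory=lambda: uuid.uuid4().hex[:8])


class Tracker:
    """Hypothetical in-memory system of record; a real tool persists runs centrally."""

    def __init__(self):
        self.runs = []

    def start_run(self, config):
        run = Run(config=dict(config))
        self.runs.append(run)
        return run

    def best(self, metric, maximize=True, where=lambda run: True):
        """Filter logged runs, then pick the best one by a metric."""
        candidates = [r for r in self.runs if metric in r.metrics and where(r)]
        pick = max if maximize else min
        return pick(candidates, key=lambda r: r.metrics[metric])


def train_and_evaluate(config):
    """Hypothetical stand-in for a training job; the score peaks at lr=0.01, batch_size=64."""
    return 0.9 - abs(config["lr"] - 0.01) * 10 - abs(config["batch_size"] - 64) / 640


def grid(space):
    """Yield every combination of the hyper-parameter values in `space`."""
    keys = list(space)
    for values in itertools.product(*(space[k] for k in keys)):
        yield dict(zip(keys, values))


def sweep(tracker, space, max_workers=4):
    """Launch one job per configuration in parallel; every job reports to the same tracker."""
    runs = [tracker.start_run(cfg) for cfg in grid(space)]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        scores = pool.map(lambda run: train_and_evaluate(run.config), runs)
        for run, score in zip(runs, scores):
            run.metrics["val_acc"] = score
    return runs


tracker = Tracker()
sweep(tracker, {"lr": [0.1, 0.01, 0.001], "batch_size": [32, 64, 128]})
best = tracker.best("val_acc")
print(best.config)  # {'lr': 0.01, 'batch_size': 64}
# Query with a filter, e.g. the best run among small-batch configurations:
small_batch = tracker.best("val_acc", where=lambda r: r.config["batch_size"] == 32)
```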

In conclusion, W&B is building standardized tools to help teams be more effective: data versioning and data exploration for data curation, experiment tracking for model development, and model registry and production monitoring for model deployment. Effective ML teams now have automated tooling to capture progress.

6 - From Algorithms to Applications

Victor Pereboom, CTO of UbiOps, shared recent developments and challenges around the deployment and management of ML models, especially MLOps, not just in cloud environments but also on-prem (where security is a lot stricter).

In the last two or three years, we have seen a big jump in the maturity that many ML applications require. Organizations are confronted with a new reality where the implementation of AI has a significant impact on competitiveness, market share, and profitability. They simply cannot fall behind and must look at ways to run and operate ML models in production. ML and AI need to be treated like any other software application with the same lifecycle, except that managing the ML lifecycle has some additional difficulties.

As a result, there’s a need for tools and best practices to go from building/testing to deploying/monitoring models in an operational setting. The MLOps ecosystem is growing rapidly with new technologies that solve specific ML-related lifecycle management problems. This also helps bridge the knowledge gap in transforming AI into scalable and live services and makes a link between data science and IT teams inside organizations.

Turning AI into a live application involves more than just a model. Analytics applications can consist of many parts and pieces with their own individual lifecycles (data pre-processing, connectors to databases, etc.). These parts need to be in the same workflow, even though they usually need their own dependencies and are reused across different applications. That raises the question: “Who is responsible for deployment and maintenance?”

Victor zoomed in on the deployment and serving challenge, the part of the MLOps lifecycle that gets less attention than the rest. It’s the part with the least to do with data science code. It’s the link to run the data science code on the infrastructure (on-prem, the cloud, or any other compute infrastructure). It’s the intermediate layer where you see the biggest skills and knowledge gap between teams inside organizations.

What makes this difficult for most teams? Many teams can do data science and research, but few can set up and manage the software and IT side. This complexity gets offloaded to data science teams, who are expected to master this. It involves a radically different technology stack: Containers, Kubernetes, Networking, API management, etc. The challenge is how to make it easy to deploy things without compromising security and resilience.

In terms of model management challenges:

If you have an ML application with different models, you first want to manage them in a central model repository, where you can apply version and revision management.

You want to separate the data science code from the IT infrastructure. A great way to do that is to turn models into individual microservices with their own APIs so that you can make algorithms accessible from all your applications at the same time.

You want to add logging, monitoring, and governance capabilities to manage how your models will perform in production.

You can also look at integration with CI/CD workflows (a common practice in software) to manage the deployment workflow with different checks and balances. You want to look at the workflow management for applications that need a sequence of steps and ensure that the whole workflow gets completed.
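Here is a minimal sketch of the central model repository idea. It assumes a hypothetical `ModelRepository` class with integer versions and simple stage labels, and it is not UbiOps' API. The artifact URIs in the usage are made up. Promoting a version archives whatever was previously live, which also makes a rollback a one-line operation.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class ModelVersion:
    version: int
    artifact_uri: str
    created_at: datetime
    stage: str = "staging"


class ModelRepository:
    """Hypothetical central repository with version and revision management."""

    def __init__(self):
        self._models = {}  # model name -> list of ModelVersion, oldest first

    def register(self, name, artifact_uri):
        versions = self._models.setdefault(name, [])
        mv = ModelVersion(len(versions) + 1, artifact_uri, datetime.now(timezone.utc))
        versions.append(mv)
        return mv

    def promote(self, name, version):
        """Move one version to production and archive whatever was live before."""
        for mv in self._models[name]:
            if mv.stage == "production":
                mv.stage = "archived"
        target = self._models[name][version - 1]
        target.stage = "production"
        return target

    def production(self, name):
        return next((mv for mv in self._models[name] if mv.stage == "production"), None)

    def history(self, name):
        return [(mv.version, mv.stage) for mv in self._models[name]]


repo = ModelRepository()
repo.register("churn", "s3://models/churn/v1")  # hypothetical artifact URIs
repo.register("churn", "s3://models/churn/v2")
repo.promote("churn", 2)
repo.promote("churn", 1)  # roll back to the previous version
```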

In terms of serving, scalability, and reliability challenges:

In the end, with production applications, companies care most about reliability, availability, and speed. Serving a live system requires setting up Kubernetes microservices so that each piece of code is packaged in its own runtime with the right dependencies (and these microservices can scale as soon as the application load goes up). A single Docker container with your model in it won’t cut it because it isn’t reliable and robust enough.

It’s important to maintain flexibility for data scientists to configure runtimes and manage dependencies. There are many different tools and frameworks for developing ML application code, so data scientists need that flexibility without interfering with the IT behind the scenes (separation of concerns).

It’s crucial to make optimal use of the available compute capacity. Kubernetes lets you granularly assign compute capacity to the specific containers that hold ML models. As more ML models use GPUs, this practice will lead to huge gains (since GPU resources are expensive), as you can reassign hardware to where it’s most needed.

In terms of challenges in secure and on-prem environments, we are seeing an increasing need for MLOps solutions in offline and private, secure cloud environments. Sectors such as government, healthcare, and energy require a stricter tradeoff between flexibility and security. In these environments, a whole set of additional challenges needs to be addressed: restrictions on runtimes and dependencies, a more stringent Test-Acceptance-Production flow, additional access controls and permission management, network and firewall restrictions, hardware limitations, etc. In Europe especially, data handling must comply with the strict GDPR. Usually, the best way to make things more secure is to automate much of the workflow to avoid human error (which is often accomplished with different checks and tests in CI/CD workflows).

Observability and monitoring of AI/ML models are becoming more important, especially around ethical and trustworthy AI. Specific ML security comes from putting in place good observability and data observation tools to keep track of changes in models and data. Looking at this whole MLOps landscape, Victor concluded that there is a new stack of MLOps technologies on the rise and a shared understanding that there is a need for interoperability and modularity between these tools.

7 - How “Reasonable Scale” ML Teams Keep Track Of Their Projects

The Neptune AI team gets the chance to talk to many ML teams of different sizes, running projects in different ML areas. They often hear that it’s not easy for them to find frameworks and best practices for managing, versioning, and organizing experiments. Parth Tiwary from Neptune AI shared some insights and best practices that they learned from those teams – including what, why, and how they track in their ML projects.

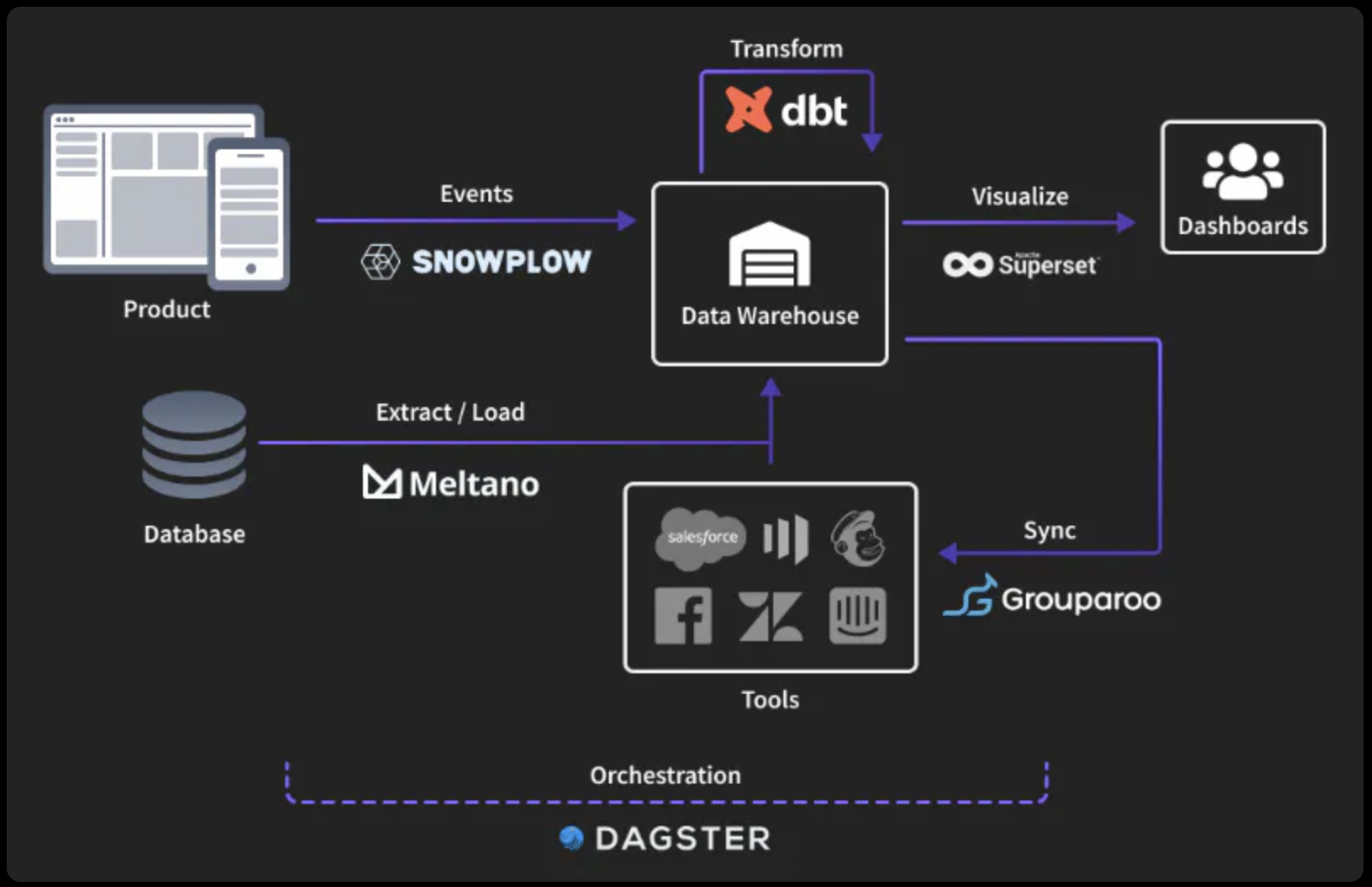

An underlying problem with the MLOps ecosystem is the lack of standardization, which can be attributed to the field's infancy. MLOps is a rapidly growing ecosystem where we are effectively adding hundreds of tools catering to several phases of the MLOps lifecycle every year. It’s difficult to keep track of what tooling you should be using. At the same time, as you end up composing your MLOps lifecycle from an array of tools, you end up scattering your data across products. This results in a situation where you do not have that data centralized from all the phases and can’t draw insights from that data to collaborate in an effective manner.

This leads the Neptune team to argue that you can only make incremental gains on models in production if you have overall control over the entire model lifecycle, from training to production, by imposing some level of reproducibility via a central source of truth (where you track model and data lineage).

A standard MLOps lifecycle resembles the one above:

It typically starts with the data validation and data preparation phases, where you perform data analysis to evaluate the feasibility of the data to train your models. After model training, you build a whole logic around model evaluation and model validation to identify the best models to put into production.

Moving to the operational side, you have a deployment pipeline to expose the production model as an API endpoint within a prediction service.

At this point, you want to keep monitoring how your model performs on real-world data against certain metrics. As soon as your model’s performance deteriorates on those metrics (or goes below/above a certain threshold), you want to retrain and reevaluate your model on those metrics.
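The monitoring-to-retraining step can be sketched as a rolling-window threshold check. `RetrainTrigger` is a hypothetical helper, and the window size and threshold are arbitrary illustration values. A real setup would route the signal to a retraining pipeline rather than just return a boolean.

```python
from collections import deque


class RetrainTrigger:
    """Hypothetical helper: request retraining when a rolling metric crosses a threshold."""

    def __init__(self, threshold, window=100, higher_is_better=True):
        self.threshold = threshold
        self.higher_is_better = higher_is_better
        self.values = deque(maxlen=window)  # only the most recent `window` values are kept

    def observe(self, value):
        """Record one metric value; return True if retraining should be triggered."""
        self.values.append(value)
        # Wait for a full window so a handful of early outliers cannot trigger retraining.
        if len(self.values) < self.values.maxlen:
            return False
        rolling = sum(self.values) / len(self.values)
        return rolling < self.threshold if self.higher_is_better else rolling > self.threshold


trigger = RetrainTrigger(threshold=0.8, window=3)
flags = [trigger.observe(acc) for acc in [0.9, 0.85, 0.82, 0.7, 0.65]]
print(flags)  # [False, False, False, True, True]
```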

More often than not, companies stitch together an MLOps lifecycle composed of various products. Not all of these products standardize how they store data in the same way, making it inefficient to organize and collaborate on that data. This motivates the idea of an abstraction layer across all these phases, where you centrally fetch data from each phase and organize it so that you can either debug/retrace errors or iterate on your models effectively.

There are various approaches that ML teams use in-house to organize their data as they start to scale and grow in team and model size - from coming up with specific naming conventions/folder structures and branch naming, to building in-house tools and maintaining open-source and end-to-end platforms. These approaches require manual effort, lead to human error, provide limited collaboration, and do not scale well.

Here are a few big pain points that these ML teams encounter quite often:

Organizing metadata: As they work with different MLOps phases, they have a mental model of how the data should look and how to use that data to draw out insights. Thus, they always look for a tool or a platform where they have the flexibility to organize the data to represent the already existing mental model of that data.

Reproducibility: How do they reproduce either certain experiments or certain phases of their MLOps lifecycle? This relates back to how they organize the data and standardize model experiments.

Collaboration: The ability to see what your teammates are working on and to share your own experiments/insights/workflows is crucial for working effectively on a problem and improving on it.

Maintenance overhead of self-hosted or in-house solutions: Keeping self-hosted solutions running reliably on their own is a big challenge.

Neptune provides a metadata store for MLOps - the single place of truth where all model information is stored, backed up, and accessible to your team. This store organizes metadata from several phases of the MLOps lifecycle: data versioning, experiment tracking, model registry, monitoring environment, model finetuning, and automatic retraining. You have a lot of analytical abstractions and in-house features, which let you extract the most insightful and actionable properties of that data and use them in several processes within your organization.

8 - Challenges in Monitoring Production ML Pipelines

Knowing when to retrain models in production is hard due to delays in feedback or labels for live predictions and distributed logic across different pipelines. As a result, users monitor thousands of statistical measures on feature and output spaces and trigger alerts whenever any measure deviates from a baseline, leading to many (ignored) false-positive signals to retrain models. Shreya Shankar discussed these challenges in greater detail and proposed preliminary solutions to improve precision in retraining alerts.

Dealing with ML pipelines is challenging. These pipelines are made up of multiple components, each of which can be chosen from a stack of over 200 tools. In many organizations, there’s also a separation between online and offline stages. Production ML, therefore, is an on-call engineer’s biggest nightmare.

In addition to this complex technical stack, many problems come up post-deployment: corrupted upstream data, pipelines missing their SLAs, a customer complaining about prediction quality while the model developer is on leave, an assumption made at training time that doesn’t hold at inference time, data “drifting” over time, etc.

It’s impractical to catch all bugs before they happen, but when we think there’s a bug in an ML system, we’d like to be able to minimize the downtime somehow. When thinking about building observability for ML systems, you want to help engineers detect bugs accurately and diagnose those bugs as well. This is hard in practice because you need to support a wide variety of skillsets for engineers and data scientists.

Shreya mentioned that there are currently three types of ML data management solutions:

Pre-training: What do I need to start training a model? How do I materialize the inputs (to be given to data scientists) so that they can train models? This type includes feature stores, ETL pipelining, etc.

Experiment tracking: What’s the best model for a pipeline? How do I convince other stakeholders that this model is the best one? This type includes tools like mlflow, wandb, etc.

Observability: What does it mean to do ML performance monitoring? How do I come up with debugging interfaces for production models? What’s the equivalent of Prometheus or Grafana for ML systems?

Observability can be defined as “the power to ask new questions of your system without having to ship new code or gather new data.” You want to log raw structured data at every component of your end-to-end pipeline so that people can query it afterward to debug. While monitoring is around known unknowns and actionable alerts (how to monitor the accuracy of models), observability is around unknown unknowns (how to ask new questions).
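To illustrate logging raw structured data and querying it afterward, here is a toy event log. It keeps JSON lines in memory as a stand-in for a real log store; `EventLog`, `emit`, and `query` are hypothetical names. The usage shows a question (which predictions came from model version 4?) that nobody had to anticipate when the logging code was written.

```python
import json
import time


class EventLog:
    """Hypothetical log: append raw, structured events from every component; query later."""

    def __init__(self):
        self.lines = []  # stand-in for a JSON-lines file or log store

    def emit(self, component, **fields):
        event = {"ts": time.time(), "component": component, **fields}
        self.lines.append(json.dumps(event))

    def query(self, **filters):
        """Post-hoc query: return events whose fields match every filter."""
        events = (json.loads(line) for line in self.lines)
        return [e for e in events if all(e.get(k) == v for k, v in filters.items())]


log = EventLog()
log.emit("feature_pipeline", request_id="r1", null_features=0)
log.emit("inference", request_id="r1", prediction=0.73, model_version=3)
log.emit("inference", request_id="r2", prediction=0.12, model_version=4)

# A new question, answered without shipping new code or gathering new data:
from_v4 = log.query(component="inference", model_version=4)
```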

At the moment, we have very imprecise definitions and alert fatigue for model performance and model quality. We know we want to measure accuracy or F1 score, but we cannot do this in real time (maybe because of feedback delay, label lag, or something else). We want our observability system to tell us accurately when to retrain our models or when something is broken. On the interface side, we have beautiful dashboards with hundreds of graphs that are not actionable. Most organizations have no end-to-end pipeline instrumentation and no ability to query and aggregate structured logs post-hoc.

In defining a “North Star” observability system, we want the user to have the flexibility while abstracting away the nitty-gritty details of data management.

Maybe that means allowing them to have a custom loss function (or something they want to track in real-time). Perhaps we can have a library of pre-defined Python-based metric definitions.

We need to account for the fact that predictions are made separately from labels or feedback. There should be at least two different streams to deal with label and prediction delays.

Automatic logging of raw events throughout the pipeline is also important because the tech stack is complex and diverse. This means asking questions like, “How do we make a tool that is interoperable with the highly customized end-to-end stack?”

A simple querying interface for logs is a nice addition.
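The two-stream requirement can be illustrated with a small join between a prediction stream and a delayed label stream. Here both streams are plain dicts keyed by a hypothetical prediction ID, which simplifies real event streams. Accuracy is computed only over predictions whose labels have arrived, and the pending fraction makes the label lag visible instead of silently ignoring it.

```python
def join_streams(predictions, labels):
    """Join predictions with (possibly delayed) labels on a shared prediction ID.

    predictions: dict of id -> predicted class; labels: dict of id -> true class.
    """
    labeled = [pid for pid in predictions if pid in labels]
    pending = len(predictions) - len(labeled)
    accuracy = (
        sum(predictions[pid] == labels[pid] for pid in labeled) / len(labeled)
        if labeled else None  # no labels yet: accuracy is unknown, not zero
    )
    return {
        "accuracy": accuracy,
        "labeled": len(labeled),
        "pending_fraction": pending / len(predictions) if predictions else 0.0,
    }


result = join_streams({"a": 1, "b": 0, "c": 1, "d": 1}, {"a": 1, "b": 1})
print(result)  # {'accuracy': 0.5, 'labeled': 2, 'pending_fraction': 0.5}
```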

Shreya’s talk focuses on the second bullet point above: challenges in monitoring ML with feedback delays. Several factors make this a hard problem:

Determining real-time performance requires labels, which are not always available post-deployment. For example, an organization might make a batch of predictions that humans annotate only at the end of each month or quarter, based on certain business metrics.

When we see a performance drop in the model, is that temporary or forever?

In the case of recommendation systems, we have degenerate feedback loops when predictions influence feedback.

There are two levels of sophistication for tracking data shift: the straw-man approach tracks the means and quantiles of features and outputs, while the “I took a stat class” approach tracks key statistics such as MMD, the KS test, the Chi-Square test, etc. Both approaches are label-unaware and don’t use all the information we have. Can we better estimate the performance of our model instead of relying on these methods for tracking shifts?
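Here is a pure-Python sketch of both approaches: comparing means and quartiles (the straw-man), and the two-sample Kolmogorov-Smirnov statistic, which is the largest gap between the two empirical CDFs. In practice one would reach for a library such as SciPy (`scipy.stats.ks_2samp`); the hand-rolled version just shows what is being computed. Both functions look only at feature values, never at labels.

```python
import statistics


def summary_shift(reference, live):
    """Straw-man approach: compare the mean and quartiles of a feature."""
    ref_q = statistics.quantiles(reference, n=4)
    live_q = statistics.quantiles(live, n=4)
    return {
        "mean_diff": statistics.fmean(live) - statistics.fmean(reference),
        "quartile_diffs": [lq - rq for lq, rq in zip(live_q, ref_q)],
    }


def ks_statistic(reference, live):
    """Two-sample K-S statistic: max |F_ref(x) - F_live(x)| over all observed x."""
    a, b = sorted(reference), sorted(live)
    na, nb = len(a), len(b)
    i = j = 0
    max_gap = 0  # kept as an integer numerator over na * nb to avoid float drift
    while i < na and j < nb:
        x = min(a[i], b[j])
        # Step past every copy of x in both samples so ties are handled correctly.
        while i < na and a[i] == x:
            i += 1
        while j < nb and b[j] == x:
            j += 1
        max_gap = max(max_gap, abs(i * nb - j * na))
    # Once one sample is exhausted, the gap can only shrink, so the loop can stop there.
    return max_gap / (na * nb)


reference = list(range(10))
live = [x + 5 for x in reference]  # the same feature, shifted upward in production
```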

For context, the figure above shows a high-level architecture of a generic end-to-end machine learning pipeline. The inference component generates predictions, while the feedback component produces labels. Feedback comes with some delay, impacting real-time accuracy scores.

In her most recent VLDB paper, Shreya breaks down real-time ML performance monitoring challenges into “coarse-grained” and “fine-grained” monitoring.

Coarse-grained metrics map most closely to business value and require labels (e.g., accuracy).

Fine-grained information is useful to indicate or explain changes in coarse-grained metrics and does not require labels (e.g., K-S test statistic between a feature’s distribution in the training set and its live distribution at inference time).

An ML observability tool should primarily alert the user on changes in coarse-grained metrics (that is, detect ML performance drops) and show fine-grained information as a means of diagnosing and reacting to ML issues, e.g., which features diverged most and how the training set should change in response. I’d recommend reading the paper for more detail on how coarse-grained monitoring helps detect ML performance issues and how fine-grained monitoring can help diagnose their root causes.
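One way to read the coarse/fine split in code is to alert only on a label-based metric and compute per-feature shift scores solely to help diagnose that alert. This is a sketch under simple assumptions, not the method from the paper. Outcomes are 0/1 correctness flags, the tolerance is arbitrary, and the standardized mean shift is a deliberately simple stand-in for richer per-feature tests such as K-S.

```python
import statistics


def coarse_alert(labeled_outcomes, baseline_accuracy, tolerance=0.05):
    """Coarse-grained: alert only when label-based accuracy drops meaningfully."""
    accuracy = statistics.fmean(labeled_outcomes)  # outcomes are 1 (correct) or 0 (wrong)
    return accuracy < baseline_accuracy - tolerance, accuracy


def rank_feature_shifts(train_features, live_features):
    """Fine-grained: rank features by standardized mean shift (a simple stand-in for K-S)."""
    scores = {}
    for name, train_values in train_features.items():
        spread = statistics.pstdev(train_values) or 1.0  # guard against constant features
        shift = abs(statistics.fmean(live_features[name]) - statistics.fmean(train_values))
        scores[name] = shift / spread
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


def monitor(labeled_outcomes, baseline_accuracy, train_features, live_features):
    alert, accuracy = coarse_alert(labeled_outcomes, baseline_accuracy)
    # Fine-grained signals are only used to diagnose an alert, never to fire one.
    diagnosis = rank_feature_shifts(train_features, live_features) if alert else []
    return {"alert": alert, "accuracy": accuracy, "diagnosis": diagnosis}


train = {"price": [10, 12, 11, 13], "age": [30, 32, 31, 29]}
live = {"price": [20, 22, 21, 23], "age": [30, 31, 32, 29]}
report = monitor([1, 0, 1, 0, 1, 1, 0, 0, 1, 0], 0.85, train, live)
```

In this toy data, accuracy has fallen to 0.5, so an alert fires, and the diagnosis ranks `price` (whose distribution moved) above `age` (whose mean did not).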

At the moment, Shreya is working on mltrace, a lightweight “bolt-on” ML observability tool, with projects in several research areas such as data systems (mitigating effects of feedback delays on real-time ML performance + using differential dataflow to compute streaming ML metrics quickly and efficiently at scale), machine learning (creating streaming ML benchmarks + building a repository of tasks with “temporally evolving tabular data”), and human-computer interaction (conducting an interview study on best practices in CI/CD for ML + visualizing large-scale data drift). If you’re interested, reach out to her to contribute!

9 - Redesigning Etsy’s ML Platform

Etsy uses ML models to create personalized experiences for millions of buyers around the world with state-of-the-art search, ads, and recommendations. Behind that success is an ML platform that was recently rebuilt from the ground up to help ML practitioners better prototype, train, and deploy ML models at scale. Kyle Gallatin and Rob Miles discussed the design principles, implementation, and challenges of building Etsy’s next-generation ML platform.

The ML Platform team at Etsy supports Etsy’s ML experiments by developing and maintaining the technical infrastructure that Etsy’s ML practitioners rely on to prototype, train, and deploy ML models at scale. When they built Etsy’s first ML platform back in 2017, Etsy’s data science team was growing, and a lack of mature, enterprise-scale solutions for managing the models they were building threatened to become a bottleneck for ML model delivery. The Platform team decided to build systems in-house to present Etsy’s ML practitioners with a consistent, end-to-end interface for training, versioning, packaging, and deploying the models they were building in their preferred ML modeling libraries.

The journey of Etsy’s ML platform started in 2017. Before that, ML models were created at Etsy for making product recommendations, but those models were exclusively batch inference. More importantly, the scientists creating them had to build all of their infrastructure from scratch. In 2017, Etsy acquired a company specializing in the Search ML space. With it came a step up in the number of ML models and a new need to serve those models online. There was general recognition that a platform could let scientists focus on the modeling work where they add the most value.

Etsy’s ML Platform V1 has a few interesting characteristics:

All of the components were built in-house: In 2017, very few open-source frameworks and tools were available.

The ML framework that underpinned the platform had flexible design principles: It was framework-agnostic, looked to define high-level interfaces, and provided common functionality like model versioning and packaging.

The platform was adapted to the cloud: Etsy had previously run on in-house data centers and quickly moved to Google Cloud, so the platform could offer features like elastic compute to the growing data science team.

Rob then walked through the major pains that the platform users started to feel:

Since everything was built in-house, new data scientists had to spend weeks learning these frameworks and systems before becoming productive. As open-source and cloud frameworks matured, these scientists expected to use the same tools they were familiar with from college or previous jobs.

While the in-house framework had good design principles, it was a large monolithic codebase. Over time, it lacked good engineering practices and slowed down development. Scientists became dependent on the ML platform team to be able to experiment with new ML frameworks, which started to slow down innovation.

It was not cloud-first and couldn’t take advantage of the increasing number of ML services available to Etsy via Google Cloud (that could help accelerate the provision of new capabilities).

These pain points led the ML Platform team to kick off a project in 2020 to redesign the ML platform from the ground up. They agreed on the following architectural principles to underpin the new platform:

They made the decision to build a flexible platform with first-class support for TensorFlow. There was a growing consensus that TensorFlow was the tool of choice for most ML applications, so they wanted to take advantage of its native tooling. However, there was also a concern about putting all of their eggs in one basket, so they made sure the platform continued to be flexible enough to support other ML frameworks.

They made the decision to build for Google Cloud. The risk of moving away from Google Cloud in the near future was sufficiently small that they didn’t need to worry about vendor lock-in and instead wanted to take advantage of Google’s managed services whenever it made sense.

They made the decision to be careful with abstractions. Their power users were particularly clear that they wanted direct access to the tools and frameworks. Thus, the Platform team must be careful in creating any abstractions that could slow down or constrain those users. However, as they pursue a goal of ML democratization, they also recognize that they may need to create some abstractions to help less-proficient users.

Kyle then dissected Etsy’s ML Platform V2, which consists of three major components:

1 - Training and Prototyping

Their training and prototyping platform largely relies on Google Cloud services like Vertex AI and Dataflow, where customers can experiment freely with the ML framework of their choice. These services let customers easily leverage complex ML infrastructure (such as GPUs) through comfortable interfaces like Jupyter Notebooks. Massive extract, transform, load (ETL) jobs can be run through Dataflow, while complex training jobs of any form can be submitted to Vertex AI for optimization.

While the ML Platform provides first-class support for TensorFlow as an ML modeling framework, customers can experiment with any model using ad hoc notebooks or managed training code and in-house Python distributions.

2 - Model Serving

To deploy models for inference, customers typically create stateless ML microservices deployed in their Kubernetes cluster to serve requests from Etsy’s website or mobile app. The Platform team manages these deployments through an in-house control plane, the Model Management Service, which provides customers with a simple UI to manage their model deployments.

The Platform team also extended the Model Management Service to support two additional open-source serving frameworks: TensorFlow Serving and Seldon Core. TensorFlow Serving provides a standard, repeatable way of deploying TensorFlow models in containers, while Seldon Core lets customers write custom ML inference code for other use cases. The ability to deploy both of these new solutions through the Model Management Service aligns with Etsy’s principle of being flexible but still TensorFlow-first.

3 - Workflow Orchestration

Maintaining up-to-date, user-facing models requires robust pipelines for retraining and deployment. While Airflow is Etsy’s primary choice for general workflow orchestration, Kubeflow provides many ML-native features and is already being leveraged internally by the ML platform team. They also wanted to complement their first-class TensorFlow support by introducing TFX pipelines and other TensorFlow-native frameworks.

Moving to GCP’s Vertex AI Pipelines lets ML practitioners develop and test pipelines using either the Kubeflow or TFX SDK, based on their chosen model framework and preference. The time it takes for customers to write, test, and validate pipelines has dropped significantly. ML practitioners can now deploy containerized ML pipelines that easily integrate with other cloud ML services and test directed acyclic graphs (DAGs) locally, further speeding up the development feedback loop.
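Testing a DAG locally can be as simple as running its steps in dependency order before submitting anything to the cloud. The sketch below uses Python's standard-library `graphlib` (Python 3.9+) and is not the Kubeflow or TFX SDK. `run_dag_locally` and the toy steps are hypothetical. A cycle in `dependencies` raises `graphlib.CycleError`, which is exactly the kind of mistake worth catching on a laptop.

```python
from graphlib import TopologicalSorter


def run_dag_locally(steps, dependencies, inputs):
    """Hypothetical local runner: execute steps in dependency order.

    steps: name -> function(context) whose return value is stored under that name.
    dependencies: name -> set of upstream step names (every step must appear as a key).
    """
    context = dict(inputs)
    for name in TopologicalSorter(dependencies).static_order():
        context[name] = steps[name](context)
    return context


steps = {
    "ingest": lambda ctx: [3, 1, 2, None],
    "clean": lambda ctx: [x for x in ctx["ingest"] if x is not None],
    "train": lambda ctx: sum(ctx["clean"]) / len(ctx["clean"]),  # toy "model": the mean
    "evaluate": lambda ctx: abs(ctx["train"] - 2.0),
}
dependencies = {"ingest": set(), "clean": {"ingest"}, "train": {"clean"}, "evaluate": {"train"}}
result = run_dag_locally(steps, dependencies, {})
```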

ML platform V2 customers have already experienced dramatic boosts in productivity. They estimate a ~50% reduction in the time it takes to go from idea to live ML experiment. A single product team was able to complete over 2000 offline experiments in Q1 alone. Using services like Google’s Vertex AI, ML practitioners can now prototype new model architectures in days rather than weeks and launch dozens of hyperparameter tuning experiments with a single command.

Kyle concluded the talk with key challenges and learnings:

Adoption has been slow. There’s a lot of inertia in the old platform: a greater portion of ML practitioners’ time is spent iterating on existing models rather than designing completely new models or workflows. However, early adopters have had success stories, and power users (who are already migrating workflows) have reported large productivity gains from TensorFlow, Vertex AI, and Kubeflow.

The more decentralized ML code means that teams with fewer resources expect expert-level support on new external tools. This drains the Platform team’s resources when they are expected to take ownership or consult on ML-specific issues outside of their primary domain.

To keep scientists and engineers in the loop, the Platform team has started to treat the platform as a product, host announcements and office hours, and assign product evangelists on various teams to promote the platform internally. These activities have helped increase adoption and drive success for both ML and the business at Etsy.

I look forward to events like this that foster active conversations about best practices and insights on developing and implementing enterprise ML strategies as the broader ML community grows. If you are curious and excited about the future of the modern ML stack, please reach out to trade notes and tell me more at james.le@superb-ai.com! 🎆

Bonus: If interested, you can watch my talk below!