Introduction

The biggest issue facing machine learning is how to put the system into production. Machine learning systems differ from traditional software in two fundamental ways:

Machine learning is never fully deterministic; therefore, the performance of an ML system can’t be evaluated against a strict specification. Instead, it should always be evaluated against application-specific metrics (false positives/negatives, churn rates, sales)

The behavior of a machine learning system is determined more by the data used for training than the model used for inference. Therefore, data collection, data wrangling, pipeline management, model retraining, and model deployment are tasks that will never go away.

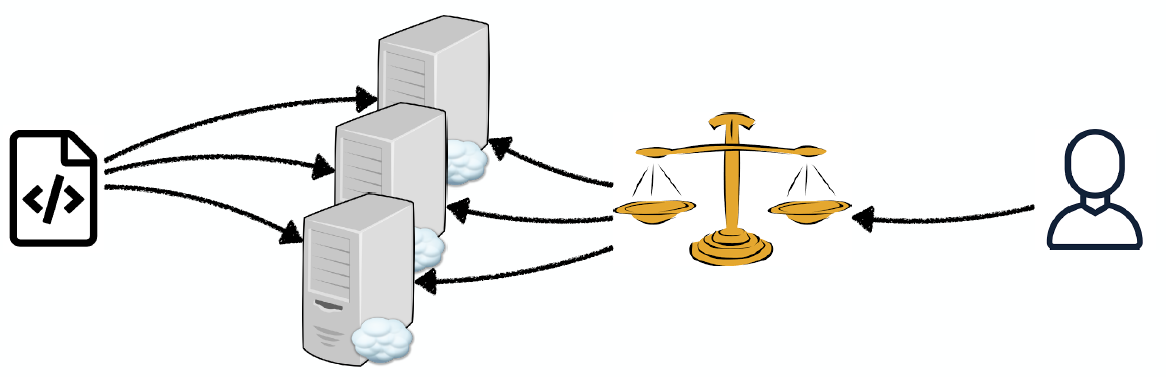

The diagram above displays a conceptual framework for the different components of a Machine Learning System:

Prediction System: This is the system that involves code to process input data, to construct networks with trained weights, and to make predictions.

Training System: This is the system with the code to process raw data, run experiments, and manage results.

Serving System: The goal of any prediction system is to be deployed into the serving system. Its purpose is to serve predictions and to scale to demand.

Training and Validation Data is used in conjunction with the training system to generate the prediction system.

At production time, we have Production Data that has not been seen before and can only be served by the serving system.

The prediction system should be tested by functionality to catch code regressions and by validation to catch model regressions.

The training system should have its tests to catch upstream regressions (change in data sources, upgrade of dependencies)

For production data, we need monitoring that raises alert to downtime, errors, distribution shifts, etc. and catches service and data regressions.

To conceptualize this framework, there is a significant paper from Google called ML Test Score — A Rubric for Production Readiness and Technical Debt Reduction — which is an exhaustive framework/checklist from practitioners at Google. It is a follow-up to previous work from Google, such as (1) Hidden Technical Debt in ML Systems, (2) ML: The High-Interest Credit Card of Technical Debt, and (3) Rules of ML: Best Practices for ML Engineering.

As seen in Figure 1 from the paper above, ML system testing is more complex a challenge than testing manually coded systems, since ML system behavior depends strongly on data and models that cannot be sharply specified a priori. One way to see this is to consider ML training as analogous to the compilation, where the source is both code and training data. By that analogy, training data needs testing like code, and a trained ML model needs production practices like a binary does, such as debuggability, rollbacks, and monitoring.

The paper presents a rubric as a set of 28 actionable tests and offers a scoring system to measure how ready for production a given machine learning system is. This rubric intends to cover a range from a team just starting with machine learning up through tests that even a well-established team may find challenging. The scoring system provides a vector for incentivizing ML system developers to achieve stable levels of reliability by providing a clear indicator of readiness and clear guidelines for how to improve.

I recently attended the Full-Stack Deep Learning Bootcamp in the UC Berkeley campus, which is a wonderful course that teaches full-stack production deep learning. One of the lectures delivered by Sergey Karayev provided excellent coverage of model testing and deployment. In this blog post, I would like to share the best practices from the lecture that allow you to deploy machine learning models in production as efficiently as possible.

1 — Testing and Continuous Integration

This section covers two related topics:

Unit Tests and Integration Tests — Tests for specific module functionality and the whole system, respectively.

Continuous Integration — An environment where tests are run every time a new code is pushed to the repository before the updated model is deployed.

Unit and Integration Testing

Unit and integration tests are designed for the training system and the prediction system. Here are the specific metrics that Google recommends:

All input feature code is tested: Bugs in features may be almost impossible to detect once they have entered the data generation process, especially if they are represented in both training and test data.

Every model specification undergoes a code review and is checked in to a repository: Proper version control of the model specification can help make training auditable and improve reproducibility.

Offline proxy metrics correlate with actual online impact metrics: A strong understanding of the relationship between these offline proxy metrics and the real impact metrics is needed to ensure that a better scoring model will result in a better production system.

Model quality is sufficient on all essential data slices: Examining sliced data avoids having fine-grained quality issues masked by a global summary metric. This class of problems often arises from a fault in the collection of training data, that caused an important set of training data to be lost or late.

Training is reproducible: Model training is often not reproducible in practice, especially when working with non-convex methods such as deep learning or even random forests. This can manifest as a change in aggregate metrics across an entire dataset, or, even if the aggregate performance appears the same from run to run, as changes on individual examples. Your tests should be able to remove this non-deterministic nature of the model training process.

Model specification code is unit tested: Unit tests should run quickly and require no external dependencies, but model training is often a prolonged process that involves pulling in lots of data from many sources. You should have at least two kinds of model tests: tests of API usage and tests of algorithmic correctness.

The full ML pipeline is integration tested: There must be a fully automated test that runs regularly and exercises the entire ML pipeline, validating that data and code can successfully move through each stage and that the resulting model performs well.

Model quality is validated before attempting to serve it: After a model is trained but before it affects real traffic, an automated system needs to inspect it and verify that its quality is sufficient; that system must either bless the model or veto it, terminating its entry to the production environment.

Continuous Integration

In modern software engineering, continuous integration is an integral part of the best practice to manage the life cycle of development efforts systematically. With a CI engine, the method requires developers to integrate/commit their code into a shared repository often. Each commit triggers an automatic build of the system, followed by running a pre-defined test suite. The engineer receives a pass/fail signal from each commit, which guarantees that every commit that gets a pass signal satisfies properties that are necessary for product deployment.

Developing machine learning models is no different, as the full life cycle involves design, implementation, tuning, testing, and deployment. As machine learning models are more tightly integrated with traditional software stacks, it becomes increasingly crucial for the machine learning development lifecycle to be managed systematically following a rigid engineering discipline — which means using continuous integration.

A quick survey of continuous integration tools yields several options: CircleCI, Travis CI, Jenkins, and Buildkite.

Both CircleCI and TravisCI are SaaS products for continuous integration that can integrate with your repository. Every push kicks off a cloud job, which can be defined as commands in a Docker container (which is discussed below) and can store results for later review. There are no GPUs supported.

Jenkins and Buildkite are friendly options for running continuous integration on your hardware, in the cloud, or a mixture of both. They are very flexible options for a scheduled training test, which allows you to use your GPUs.

2 — Containerization

Machine Learning in Production is very different from Machine Learning in Concept. Mostly, there are lots of waste in the development lifecycle:

Inconsistent environments across team members

Wasted time configuring Python libraries

Lost time configuring system packages

Additional compute resources not easily accessible for all engineers

Every new engagement is a new project starting from scratch which requires time to get set up

Local development environment doesn’t match customer production environments which leads to unforeseen issues late in the delivery cycle

There comes Docker to the rescue! It is a computer program that performs operating-system-level virtualization, also known as containerization. What is a container, you might ask? It is a standardized unit of fully packaged software used for local development, shipping code, and deploying system. The best way to describe it intuitively is to think of a process that is surrounded by its filesystem. You run one or a few related processes, and they see a whole filesystem, not shared by anyone. This makes containers extremely portable, as they are detached from the underlying hardware and the platform that runs them; they are very lightweight, as a minimal amount of data needs to be included, and they are secure, as the exposed attack surface of a container is extremely small.

Note here that containers are different from virtual machines.

Virtual machines require the hypervisor to virtualize a full hardware stack. There are also multiple guest operating systems, making them large and more extended to boot. This is what AWS / GCP / Azure cloud instances are.

Containers, on the other hand, require no hypervisor/hardware virtualization. All containers share the same host kernel. There are dedicated isolated user-space environments, making them much smaller in size and faster to boot.

Containers are far from new; Google has been using its container technology for years. Other Linux container technologies have been around for many years. So why is Docker all of the sudden gaining steam?

Timing: It was first released in 2013 at the height of the DevOps craze at the time where there are more and more software engineers entering the market.

UX: It has the best developer-friendly abstraction layer to date. The Dockerfile and Docker image framework makes it easy for reproducibility purposes.

Ecosystem: It has the DockerHub for community-contributed images. It’s incredibly easy to search for images that meet your needs, ready to pull down and use with little-to-no modification.

Marketing: The container ship analogy is easy to understand. Plus, who doesn’t love the Docker whale? :)

In brief, you should familiarize with these basic concepts: (1) Dockerfile defines how to build an image, (2) Image is a built packaged environment, (3) Container is where images are run inside of, (4) Repository hosts different versions of an image, and (5) Registry is a set of repositories. If you want to dig deeper into Docker, I would recommend reading this article from Preethi Kasireddy and this cheatsheet from Aymen El Amri.

Though Docker presents on how to deal with each of the individual microservices, we also need an orchestrator to handle the whole cluster of services. For that, Kubernetes is the open-source winner, and it has excellent support from the leading cloud vendors. I won’t discuss Kubernetes here, but this article from Jeremy Jordan will do justice. In general, as machine learning engineers, we want to use Docker to package up our dependencies, but we don’t necessarily need Kubernetes unless we are doing something more complicated interfacing with other services.

3 — Web Deployment

To deploy any sort of prediction system to a serving system, you would want to ensure that:

Models are tested via a canary process before they enter production serving environments: One recurring problem that canarying can help catch is mismatches between model artifacts and serving infrastructure. If you want to mitigate the mismatch issue, one approach is testing that a model successfully loads into production serving binaries and that inference on production input data succeeds. To minimize the new-model risk more generally, one can turn up new models gradually, running old and new models concurrently, with new models only seeing a small fraction of traffic, progressively increased as the new model is observed to behave sanely.

Models can be quickly and safely rolled back to a previous serving version: A model “rollback” procedure is a vital part of incident response to many of the issues that can be detected by the monitoring discussed later on. Being able to revert to a previously known-good state quickly is as crucial with ML models as with any other aspect of a serving system.

Specifically, for web deployment, you need to be familiar with the concept of REST API. This means serving predictions in response to the canonically-formatted HTTP requests. The web server can then run and call the prediction system. You have a couple of options to accomplish this:

You can deploy the code to Virtual Machines, and then scale by adding instances.

You can deploy the code as containers, and then scale via orchestration.

You can deploy the code as a “server-less function.”

Or you can deploy the code via a model serving solution.

Deploy Code to Cloud Instances

The idea here is that you push your code to virtual machines like EC2 instances or Google Cloud Platform. Each instance has to be provisioned with dependencies and app code. The number of instances is managed manually or via auto-scaling. Finally, the load balancer sends user traffic to the instances. There are two cons to this approach:

Provisioning can be brittle since you are doing it yourselves (no Docker here).

You might pay for instances even when you are not using them (auto-scaling does help in this case).

Deploy Docker Containers to Cloud Instances

The idea here is that the app code and dependencies are packaged into Docker containers. Then Kubernetes or alternatives (AWS Fargate) orchestrates containers (DB/workers/webservers / etc.) The con is that you are still managing your servers and paying for uptime, not compute-time.

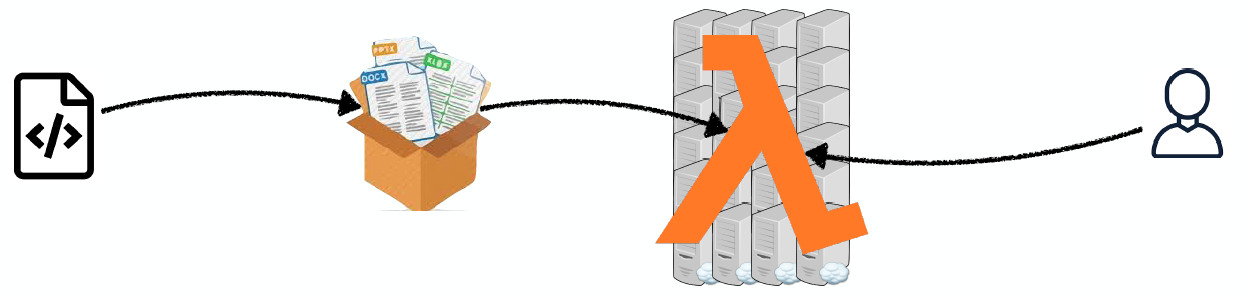

Deploy Serverless Functions

The idea here is that the app code and dependencies are packaged into .zip files, with a single entry point function. Then all the major cloud providers such as AWS Lambda, Google Cloud Functions, or Azure Functions will manage everything else: instant scaling to 10,000+ requests per second, load balancing, etc.

The good thing is that you only pay for compute-time. Furthermore, this approach lowers your DevOps load, as you do not own any servers.

The tradeoff is that you have to work with severe constraints: (1) Your entire deployment package has to fit within 500MB, < 5 min execution, < 3GB memory on AWS Lambda; (2) You can only do CPU execution.

You can also do canarying — which makes it easy to have two versions of Lambda functions in production and to start sending low volume traffic to one.

You can also do rollback — which makes it easy to switch back to the previous version of the function.

Deploy via Model Serving

These are web deployment options specialized for ML models: TensorFlow Serving from Google, Model Server for MXNet from Amazon, Clipper from Berkeley RISE Lab, and SaaS solutions like Algorithmia. In general, the most important feature is their ability to batch requests for GPU inference.

Tensorflow Serving essentially allows you to serve Tensorflow predictions at high throughput. It is used by Google and its “all-in-one” ML offerings (for example, Google Cloud API). This is probably an overkill solution unless you know you need to use GPU for inference.

MXNet Model Server is Amazon’s answer to Tensorflow Serving, which is a part of their SageMaker “all-in-one” ML offering.

Clipper is an open-source model serving via REST, using Docker. There are some nice-to-haves on top of the basics, for example, “state-of-the-art bandit and ensemble methods to intelligently select and combine predictions.” This project is probably too early to be useful (unless you know why you need it).

Some startups promise effortless model serving, for example, Algorithmia. First, you train your models with the framework of your choice. Then, a simple git push into the AI Layer makes your model ready for scale. Finally, the AI Layer manages the hardware and makes the model available as an API. This includes a couple of things like the Lambda function and load balancer.

Here are the key takeaways:

If you are making CPU inference, you can get away with scaling by launching more servers (Docker), or going serverless (AWS Lambda).

If you are using GPU inference, things like TF Serving and Clipper become useful with features such as adaptive batching.

4 — Hardware and Mobile Deployment

There are two significant problems when it comes to hardware and mobile deployment:

Embedded and mobile devices have low-processor with little memory, which makes the process slow and expensive to compute. Often, we can try some tricks such as reducing network size, quantizing the weights, and distilling knowledge.

Embedded and mobile PyTorch/TensorFlow frameworks are less fully featured than the full PyTorch/TensorFlow frameworks. Therefore, we have to be careful with the model architecture. An alternative option is using the interchange format.

Let’s explore some of these approaches in more detail:

Mobile Frameworks

Mobile machine learning is still in its infancy. As such, new frameworks are regularly in flux.

Tensorflow Lite provides the framework for a trained TensorFlow model to be compressed and deployed to a mobile or embedded application. Interfacing with the TensorFlow Lite Interpreter, the app can then utilize the inference-making potential of the pre-trained model for its purposes. In this way, TensorFlow Lite works as a complement to TensorFlow. The computationally expensive process of training can still be performed by TensorFlow in the environment that best suits it (personal server, cloud, over-clocked computer) TensorFlow Lite then takes the resulting model (frozen graph, SavedModel, or HDF5 model) as input, packages, deploys, and then interprets it in the client application, handling the resource-conserving optimizations along the way.

PyTorch Mobile is a relatively new framework for helping mobile developers and machine learning engineers embed PyTorch models on-device. Currently, it allows any TorchScript model to run directly inside iOS and Android applications. PyTorch Mobile’s initial release supports many different quantization techniques, which shrink model sizes without significantly affect performance. PyTorch Mobile also allows developers to directly convert a PyTorch model to a mobile-ready format, without needing to work through other tools/frameworks. Considering that the framework is still in an experimental build, there are a few notable limitations: (1) slow for medium to large models, (2) no GPU support, and (3) requires developers to write in Objective C or C++.

Core ML was released by Apple back in 2017. It is optimized for on-device performance, which minimizes a model’s memory footprint and power consumption. Running strictly on the device also ensures that user data is kept secure, and the app runs even in the absence of a network connection. Generally speaking, it is straightforward to use with just a few lines of code needed to integrate a complete ML model into your device. The downside is that you can only make the model inference, as no model training is possible.

ML Kit was announced by Google Firebase in 2018. It enables developers to utilize ML in mobile apps either with (1) inference in the cloud via API, or (2) inference on-device (like Core ML). For the former option, ML Kit offers six base APIs with pertained models such as Image Labeling, Text Recognition, and Barcode Scanning. For the latter option, ML Kit offers lower accuracy but more security to user data, compared to the cloud version.

If you are interested in the mobile ML landscape, feel free to check out this collection curated by the FritzAI team. Additionally, FritzAI itself is an ML platform for mobile developers that provide pre-trained models, developer tools, and SDKs for iOS, Android, and Unity.

Embedded Frameworks

From portable medical devices to automated delivery drones, intelligent edge solutions demand advanced inference to solve complex problems. But these devices can’t rely on network connections back to the data center. They need inference performance in a low-power, small form factor onboard. The best solution in the industry at the moment is the NVIDIA Jetson platform.

It is powered by NVIDIA GPU and supported by the NVIDIA JetPack SDK — which includes TensorRT for optimizing deep learning models for inference and other libraries (computer vision, accelerated computing, multimedia).

Depending on specific performance and budget needs, you can choose between different Jetson hardware solutions, which all share the same architecture and SDK, allowing for one code base and seamless deployment across the entire product portfolio.

Model Pruning and Quantization

Both pruning and quantization are model compression techniques that make the model physically smaller to save disk space and make the model require less memory during computation to run faster.

Pruning removes part of a model to make it smaller and faster. A popular technique is weight pruning, which removes individual connection weights. This technique is similar to the human brain’s development when different connections are strengthened while others die away. Another approach is neuron pruning, which removes entire neurons. This translates to removing columns or rows in weight matrices.

Quantization decreases the numerical precision of a model’s weights. In other words, each weight is permanently encoded using fewer bits. A straightforward method is implemented in the TensorFlow Lite toolkit. It turns a matrix of 32-bit floats into 8-bit integers by applying a simple “center-and-scale” transform to it: W_8 = W_32 / scale + shift (scale and shift are determined individually for each weight matrix). This way, the 8-bit W is used in matrix multiplication, and only the result is then corrected by applying the “center-and-scale” operation in reverse.

An excellent case study of model pruning is MobileNet, which performs various downsampling techniques to a traditional ConvNet architecture to maximize accuracy while being mindful of the restricted resources for a mobile or an embedded device. This analysis by Yusuke Uchida explains why MobileNet and its variants are fast.

Knowledge Distillation

Knowledge distillation is a compression technique in which a small “student” model is trained to reproduce the behavior of a large “teacher” model. The method was first proposed by Bucila et al., 2006 and generalized by Hinton et al., 2015. In distillation, knowledge is transferred from the teacher model to the student by minimizing a loss function in which the target is the distribution of class probabilities predicted by the teacher model. That is — the output of a softmax function on the teacher model’s logits.

So how do teacher-student networks exactly work?

The highly-complex teacher network is first trained separately using the complete dataset. This step requires high computational performance and thus can only be done offline (on high-performing GPUs).

While designing a student network, correspondence needs to be established between intermediate outputs of the student network and the teacher network. This correspondence can involve directly passing the output of a layer in the teacher network to the student network, or performing some data augmentation before passing it to the student network.

Next, the data are forward-passed through the teacher network to get all intermediate outputs, and then data augmentation (if any) is applied to the same.

Finally, the outputs from the teacher network are back-propagated through the student network so that the student network can learn to replicate the behavior of the teacher network.

A well-known recent case study of applying knowledge distillation in practice is Hugging Face’s DistilBERT, which is a smaller language model derived from the supervision of the popular BERT language model. DistilBERT removed the toke-type embeddings and the pooler (used for the next sentence classification task) from BERT while keeping the rest of the architecture identical and reducing the number of layers by a factor of two. Overall, DistilBERT has about half the total number of parameters of the BERT base and retains 95% of BERT’s performances on the language understanding benchmark GLUE.

Interchange Format

The result of any trained deep learning algorithm is a model file that efficiently represents the relationship between input data and output predictions. Most often, these models exist in a data format such as HDF5 or Pickle. Usually, you want these models to be portable so that you can deploy them in environments that might be different than where you initially trained the model.

The Open Neural Network Exchange (ONNX for short) is designed to allow framework interoperability. The dream is to mix different frameworks, such that frameworks that are good for development (PyTorch) don’t also have to be good at inference (Caffe2). The idea is that you can train a model with one tool stack and then deploy it using another for inference/prediction. ONNX is a robust and open standard for preventing framework lock-in and ensuring that your models will be usable in the long run.

5 — Monitoring

It is crucial to know not just that your ML system worked correctly at launch, but that it continues to work correctly over time. Now that the model is live, you need to understand how it performs in production and close the data feedback loop. It is crucial to monitor serving systems, training pipelines, and input data. A typical monitoring system can raise alarms when things go wrong and provide the records for tuning things.

Let’s review the specific metrics to monitor throughout an ML system, as recommended by Google.

1 — Dependency changes result in notifications:

Partial outages, version upgrades, and other changes in the source system can radically change the feature’s meaning and thus confuse the model’s training or inference, without necessarily producing values that are strange enough to trigger other monitoring.

Make sure that your team is subscribed to and reads announcement lists for all dependencies, and make sure that the dependent team knows your team is using the data.

2 — Data invariants hold in training and serving inputs:

You want to measure whether data matches the schema and alert when they diverge significantly.

In practice, careful tuning of alerting thresholds is needed to achieve a useful balance between false positive and false negative rates to ensure these alerts remain helpful and actionable.

3 — Training and serving features compute the same values:

To measure this, it is crucial to log a sample of actual serving traffic. For systems that use serving input as to future training data, adding identifiers to each example at a serving time will allow direct comparison; the feature values should be perfectly identical at training and serving time for the same example. Important metrics to monitor here are the number of features that exhibit skew, and the number of examples exhibiting skew for each skewed feature.

Another approach is to compute distribution statistics on the training features and the sampled serving features and ensure that they match. Typical statistics include the minimum, maximum, or average values, the fraction of missing values, etc. Again, thresholds for alerting on these metrics must be carefully tuned to ensure a low enough false positive rate for an actionable response.

4 — Models are not too stale:

For models that re-train regularly (e.g., weekly or more often), the most obvious metric is the age of the model in production.

It is also essential to measure the age of the model at each stage of the training pipeline to quickly determine where a stall has occurred and react appropriately.

5 — The model is numerically stable:

Explicitly monitor the initial occurrence of any NaNs or infinities.

Set plausible bounds for weights and the fraction of ReLU units in a layer returning zero values, and trigger alerts during training if these exceed appropriate thresholds.

6 — The model has not experienced dramatic or slow-leak regressions in training speed, serving latency, throughput, or RAM usage:

It is useful to slice performance metrics not just by the versions and components of code, but also by data and model versions.

Degradations in computational performance may occur with dramatic changes (for which comparison to the performance of prior versions or time slices can be helpful for detection) or in slow leaks (for which a pre-set alerting threshold can be beneficial for detection).

7 — The model has not experienced a regression in prediction quality on served data: Here are some options to make sure that there is no degradation in served prediction quality due to changes in data, differing code paths, etc.

Measure statistical bias in predictions, i.e., the average of predictions in a particular slice of data.

In some tasks, the label is available immediately or soon after the prediction is made (e.g., will a user click on an ad). In this case, we can judge the quality of predictions in almost real-time and identify problems quickly.

Finally, it can be useful to periodically add new training data by having human raters manually annotate labels for logged serving inputs. Some of this data can be held out to validate the served predictions.

Overall, it is important to monitor the business uses of the model, not just its statistics. Furthermore, it is important to be able to contribute failures back to the dataset. This is achieved either by collecting more real data or by adding a human in the loop to analyze the new data captured from production, and curate it to create new training datasets for new and improved models.

Conclusion

If you want to get a nice summary of this piece, take a look at section 4 of this GitHub repo on Production Level Deep Learning, created by Alireza Dirafzoon (another Full-Stack Deep Learning attendee). If you skipped to the end, here are the key takeaways:

Test everything that can be tested via unit and integration tests.

Use a continuous integration workflow to ensure rigorous testing.

Use a Docker container to run the tests and package up your code.

Choose the appropriate deployment tools for your serving system.

Monitor the health of your system by collecting statistics on the model performance on live data.

As machine learning continues to evolve and perform complex tasks, so is evolving our knowledge of how to manage and deliver such applications to production. Choosing how to deploy predictive models into production is quite a complicated affair. There are different ways to handle the lifecycle management of the predictive models, different formats to store them, multiple ways to deploy them, and a very vast technical landscape to pick from.

By following the guidelines shared in this article, as a machine learning engineer, you should be able to: (1) Collaborate seamlessly with engineering and DevOps to build ML-powered applications that can provide real business impact; and (2) Scale ML initiatives quickly by converting their true potential into business processes and systems. Big enough of an incentive, is it?