The 82nd episode of Datacast is my conversation with Dr. Chen-Ping Yu—the co-founder and CTO of Phiar, a company that is bringing human-like perception to every vehicle with their advanced lightweight spatial AI.

Our wide-ranging conversation touches on his academic research in computer vision and cognitive science at institutions such as RIT, Penn State, Stony Brook, and Harvard; his current journey with Phiar building a complete Visual Mobility platform; lessons learned as a first-time founder going through YC, fundraising, hiring; and much more.

Please enjoy my conversation with Chen-Ping!

Listen to the show on (1) Spotify, (2) Apple Podcasts, (3) Google Podcasts, (4) TuneIn, and (5) iHeartRadio.

Key Takeaways

Here are the highlights from my conversation with Chen-Ping:

On Studying at RIT

RIT was not a big research school. Instead, they emphasize practical education with a co-op program, in which students are required to work at companies for a minimum of 5 semesters as part of the graduation requirements. Towards the end of my Bachelor’s degree, I enrolled in more specialized courses such as AI and computer vision and broadened my view about ML-related topics.

Additionally, I had done a co-op at the University of Rochester’s Computational Neuroscience lab, where I wrote software for their experiments to model human-visual perception. I thought it would be interesting to combine computer vision and human vision modeling into a single project. Therefore, I proposed to my professor at RIT and my co-op advisor at U-of-R to focus my Master’s thesis on modeling human visual perception.

My MS thesis was a computational method of modeling the biological visual cortex. At the time, we were not modeling human visual perception; we were modeling monkey’s. We recorded the neurons’ firing rate of the monkey’s visual cortex neurons by presenting some visual stimuli to let the monkeys see and observing how the neurons reacted to such stimuli. Then, we would collect the data and attempt to build computational models capable of modeling these data. Our model was based on a mixture of a Gaussian model and a neural network, trained using monkeys’ neuronal activation profile and used to predict how the neural network would process if given different kinds of visual stimuli.

On Studying at Penn State

While RIT focuses on the practical aspect of engineering, Penn State is a primarily research-focused school. When I got to Penn State, I started taking classes in pattern recognition, computer vision, statistical machine learning, etc. I realized that I knew nothing about the theoretical foundations of statistics, probabilities, and linear algebra. I would almost put it as a culture shock when I got to Penn State, which opened me up to the major league of computer vision and machine learning.

My work at Penn State was primarily focused on brain tumor segmentation. During that time, I worked extensively with neurologists from the Penn State Hospital to go through all the brain MRIs and understand what brain tumors look like in order to design algorithms that automatically segment brain tumors.

I remember I was introduced to this project, and my advisor, at the time, asked me to become the expert in brain tumor segmentation in the next three months. I was thinking: “Wow, there are so many people working on brain tumor segmentation. I just started my study. How am I supposed to beat all the experts out there and achieve state-of-the-art in 3 months?” Then I realized that’s what research is all about: building state-of-the-art methods on top of everybody else’s.

At the time, I understood how computer vision is about from a non-biologically-inspired angle only. During a lab meeting, a lab-mate presented his blob detection method for crowd counting and object tracking. I thought that brain tumors also look like blobs. If he could use a blob detector to detect and track people, maybe I could use a blob detector to detect brain tumors as a rough localization and fine-tune their shape afterward. That formed my main research direction to design a blob detection-based brain tumor segmentation method.

On Getting a Ph.D. from Stony Brook

Given my academic background at RIT and Penn State, I was interested in Ph.D. opportunities that heavily tied with biologically-inspired methods for modeling computer vision and computational models of biological vision. Stony Brook took an interest in me because my Stony Brook advisors (Dr. Gregory Zelinsky and Dr. Dimitris Samaras) were collaborators from the psychology and the computer science department. I joined both of their labs and worked in computer vision and psychology. I published in computer science conferences like CVPR, ICCV, NeurIPS, ICML, as well as psychology conferences like Journal Vision, Psychological Science, Visual Cognition. It was very fulfilling to me because the methods I came up with could be applied in both directions. These two paths were not exactly separate but were synergistic and helped diversify my time at Stony Brook.

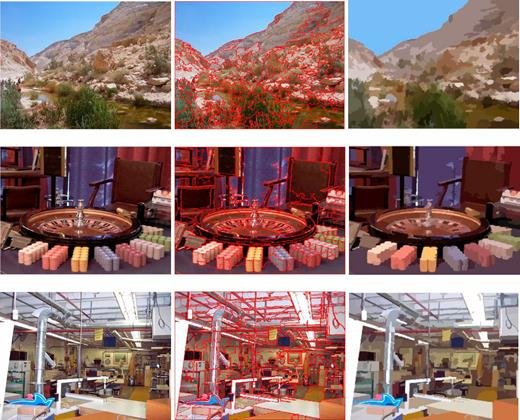

For my Ph.D. work, on the computer vision side, I focused on an unsupervised way of learning how to segment an image. Once the image has been segmented, it’s broken into parts that are visually similar to each other, what we call “proto-objects.” Proto-objects mean that there could be object parts or things. In many natural images, an object’s notion is difficult to define. That’s why we thought then that, instead of using objects to quantify things in an image, proto-objects could be the right level of detail (regions of similar visual features). I came up with an unsupervised algorithm that can segment the image into proto-objects. The method is fast, accurate, and applicable to different things.

Another part of my research was to understand how humans understand object categories and look for things in an image. We were trying to define how a category object can be represented. Most AI methods are trained to learn from both positive examples and negative examples. But for humans, a lot of times, we learn only with positive examples. Ultimately, we came up with category-consistent features, visual features that describe a certain category. Then, we use such features computationally to represent what a category might look like.

On Post-Doc Research at Harvard

After my Ph.D., I was deciding on what to do next. At the time, I was interested in continuing through academia. I applied for a postdoc position at Harvard and continued working on brain-inspired computer vision. At Harvard, I built a deep neural network that was as closely designed to the human visual cortex as possible. At a high level, I tried to understand how neurons are organized and built that organization as an architectural principle of a deep neural network. Opposite to today’s deep learning (in which the goal is to maximize accuracy), my goal was to maximize the similarity of the network architecture to the human’s visual cortex. Based on that architecture, I would train the network to see what kind of information it could learn. That’s what Map-CNN is all about.

On Founding Phiar

At Harvard, I realized how hard it was to drive in Boston. The roads and traffic were so complex that I still made wrong turns even when I was driving and reading Google Maps. Because the map is 2D, even though it’s telling me to make a particular turn, there might be ten different roads tolling into each other on that map. I got so fed up and thought: “Why can’t someone show me a live video feed of what’s in front of me and overlay my route directly onto that feed?” I would know exactly where to go, rather than reading the map, because my phone was already mounted with a camera facing forward. Then, I realized my background is in computer vision. It’s an interesting idea that I can build a company around because it came from a real pain point that I was facing.

On Phiar’s Spatial AI Engine

We originally wanted to build our AR navigation application directly on the smartphone because we felt that you could use our application as long as you have a smartphone, no matter what kind of car you drive. Then, we realized what we were building was actually self-driving without driving the car.

Imagine if you are able to overlay AR route onto the video feed that is conforming to the lane (at which lane is supposed to drive onto), then if we have control of your car, we can technically just drive your car to follow the path that we have planned for you in AR. For our AR navigation, we are not driving a car, but we have to do everything else before that (facial understanding, road understanding, knowing which lane you are in, how many lanes there are, estimating how the car is moving, your heading direction, your speed, path planning, etc.). That requires a lot of perception and road understanding for us to compute what your path is supposed to be in front of you.

Making all that work on a small phone-grade processor was unheard of at the time because smartphones, while they are powerful, were not meant to support cutting-edge deep learning-based processes. Most deep learning requires GPUs or multiple GPUs to run; even then, they may not run in real-time. That’s why with my background in efficient computer vision, we started to focus on the technical side of enabling our navigation with a very powerful AI system that can understand the road, but at the same time is very efficient. That’s why we developed an ultra-lightweight Spatial AI engine that powers our AR application product.

On Phiar’s Visual Mobility platform

Our current product is AR navigation for driving. We had to switch from a smartphone-based application to automotive facing last year because we found that the cars were coming out with bigger screens, which would be great displays for AR navigation. The carmakers themselves were looking for AR navigation to be shipped in their vehicles. Therefore, we have been working on AR navigation inside the car as software and the AI engine that powers the AR navigation as a separate product. We called that AI engine a Visual Mobility platform because we want it to be used by developers to build their own applications on top of it. It’s similar to Unity, where Unity is a 3D engine that you can build 3D games using it. We allow people to build visual applications using our AI engine, including many different use cases.

On Being A First-Time Founder

As a first-time founder, everything was a learning experience for me. We don’t know anything. There were just no prior data points for us. Therefore, the most practical advice I would give people is to soak in all the experiences that they have been through. All of that will be very helpful for your next venture. In the meantime, it’s important to create a company solving a real pain point, create a scalable business, and make sure that the product you are building is something that people actually want to use.

For first-time founders, whoever is willing to invest would be the right investor for you. If you are lucky to get multiple term sheets, then you can do due diligence by getting references about investors. But again, most references are positive things anyway, and you would never know until you actually work with the person. Then, you will be able to know what to do better the next time around.

On Hiring

Hiring is so hard. The reality for a startup in the Bay Area is that it’s never easy to hire, especially for AI talent. You have the big tech companies next to you trying to grab the same type of talent. Most of the time, when the talent is excellent, even if they have a lot of passion for your company, ultimately, when they get offers from these big companies, the numbers can outweigh any of their passion in startups.

For us, we just have to cast an extremely wide net to find the very few people who are just extremely passionate about startups to be willing to take the chance to work with us. Of course, we believe tremendously about the future potential of our company.

On Being A Researcher And A Founder

Problem-solving is the big similarity between these two professions. A researcher’s job is to solve a problem with a new approach that can give you even better results than before. You have to solve it in a limited time because you have to publish within conference deadlines. That means I was trained to be very cautious in time management and organizational priorities. I have to be able to know what to prioritize and finish something within the time limit.

It’s a lot like the cooking show “Chopped.” You have 30 minutes to come up with a dish, then the judges will give you some surprising ingredients. There will always be issues that arise during that competition. You may have broken things or burned the food while the time is still ticking. You still have to make sure you deliver in a timely manner.

Ultimately, a startup is the same. You have a limited amount of funding and have to deliver. It doesn’t matter if you have enough people or not. You have to figure out a way to make things work. The big difference is that there is also the operational side in a startup that a researcher doesn’t need to take care of. Thus, being a founder has been a great learning experience for me.

Show Notes

(01:47) Chen-Ping shared his upbringing growing up in Taiwan and going to boarding school in the US at the age of 14.

(04:42) Chen-Ping got his Bachelor’s and Master’s degrees in Computer Science from RIT back in the early-to-mid 2000, in which he did academic research in computational neuroscience.

(08:18) Chen-Ping walked through his MS thesis at RIT, designing and implementing a computational model of neurons from the visual cortex’s medial superior temporal area.

(10:18) Chen-Ping talked about the academic culture shock of pursuing his Master’s degree in Computer Science and Engineering at Penn State.

(13:47) Chen-Ping walked through his MS thesis at Penn State, proposing a statistical asymmetry-based automatic brain tumor detection from 3D MR images.

(18:35) Chen-Ping discussed the thread of his research as a Ph.D. student at Stony Brook, where he worked at the Computer Vision Lab and the Eye Cog Lab.

(23:19) Chen-Ping unpacked his Ph.D. dissertation at Stony Brook called computational models of visual features: from proto-objects to object categories.

(28:54) Chen-Ping went through his internship experience at Riverbed Technology and Shutterstock.

(30:20) Chen-Ping dissected the development of a neuro-inspired deep convolutional neural network called Map-CNN for modeling human early visual information processing during his time as a Postdoc at Harvard’s Cognitive and Neural Organization Lab.

(32:14) Chen-Ping mentioned research areas at the intersection of computer vision and cognitive vision that he is excited about.

(33:33) Chen-Ping shared the story behind the founding of Phiar with James Briscoe, an ex-classmate from RIT, and Ivy Lee, an ex-colleague from Shutterstock.

(36:33) Chen-Ping discussed technical challenges with developing an ultra-lightweight Spatial AI engine that allows any vehicle to perceive its surroundings using a camera that can run in real-time at the edge on a commodity automotive computing platform.

(39:36) Chen-Ping unpacked the key features of a complete Visual Mobility platform, including automobile integration, AR navigation, digitized environment, smart parking, 3rd-party integration, and reality-as-a-service.

(41:16) Chen-Ping shared details around Phiar’s ultra-efficient monocular depth estimation AI that runs efficiently on a mobile phone and achieves SOTA accuracies on the benchmark KITTI dataset.

(43:16) Chen-Ping revisited his experience going through the Y-Combinator incubator in the summer of 2018.

(44:27) Chen-Ping shared high-level fundraising advice for first-time founders.

(46:30) Chen-Ping talked about strategies he found useful to identify the right client partnerships for Phiar.

(48:10) Chen-Ping shared valuable hiring lessons learned at Phiar.

(51:37) Chen-Ping reflected on the difference between being a researcher and a founder.

(53:43) Closing segment.

Chen-Ping’s Contact Info

Phiar’s Resources

“Phiar Secures $12M Series A and Names Google Head of Android Automotive Platforms as CEO” (Sep 2021)

Mentioned Content

People

Books and Papers

“Zero To One” (by Blake Masters and Peter Thiel)

“Modeling Clutter Perception using Parametric Proto-object Partitioning” (NIPS 2013)

“Modeling visual clutter perception using proto-object segmentation” (June 2014)

“Generating the features for category representation using a deep convolutional neural network” (Sep 2016)

“Map-CNN: A Convolutional Neural Network with Map-like Organizations” (Aug 2017)

“Mid-level visual features underlie the high-level categorical organization of the ventral stream” (Sep 2018)

About the show

Datacast features long-form, in-depth conversations with practitioners and researchers in the data community to walk through their professional journeys and unpack the lessons learned along the way. I invite guests coming from a wide range of career paths — from scientists and analysts to founders and investors — to analyze the case for using data in the real world and extract their mental models (“the WHY and the HOW”) behind their pursuits. Hopefully, these conversations can serve as valuable tools for early-stage data professionals as they navigate their own careers in the exciting data universe.

Datacast is produced and edited by James Le. Get in touch with feedback or guest suggestions by emailing khanhle.1013@gmail.com.

Subscribe by searching for Datacast wherever you get podcasts or click one of the links below:

If you’re new, see the podcast homepage for the most recent episodes to listen to, or browse the full guest list.