The 75th episode of Datacast is my conversation with Michel Tricot — the co-founder and CEO of Airbyte, whose mission is to make data integration pipelines a commodity.

Our wide-ranging conversation touches on his Computer Science education in France, his move to San Francisco for a startup job, his work building the modern data stack at LiveRamp and the engineering architecture of the rideOS ride-hail platform, his current journey with Airbyte, and his high-level perspectives on open-source pricing, community building, product prioritizing, hiring, and fundraising.

Please enjoy my conversation with Michel!

Listen to the show on (1) Spotify, (2) Apple Podcasts, (3) Google Podcasts, (4) TuneIn, (5) RadioPublic, and (6) Stitcher.

Key Takeaways

Here are highlights from my conversation with Michel:

On Studying in France

In France, when entering school, you have two years of intensive scientific studies with a lot of math, physics, biology, etc. Then you have three years of applied computer science (CS), where you dive into programming. I’d say the first two years were the continuation of high school for me. But the last three years were just amazing. It was a great way to pursue my passion for CS by going deep into subjects like building a Kernel or a Shell. Besides these applied courses, some classes taught me how to build a company, manage a team, or be a leader in CS. I’d say my favorite courses were the ones about databases — learning how to design database architectures at both the high and low-level.

On Working at FactSet Research Systems

FactSet has many specialized databases for specific dimensions of finance. I was working with the estimate database — gathering all the analysts’ estimates from companies' quarterly results. This data was just all over the place. It comes in XML files, PDF files, or email. How to bring this data? How to collect it? How to make it queryable by traders and financial analysts? That was my first experience learning to build data pipelines and make the data available to the end-users.

There are regulatory challenges: Since we were able to gather all this data, we needed to partition how the data got corrected so that (1) there was no risk for insider work to be done and (2) the data happened and was available at the same time for everyone. There was a lot of permission around the data, and not everybody was allowed to have access to some of the data.

On Moving to San Francisco

In 2011, I needed to decide whether I wanted to stay in France or go back to the US. I reached out to friends from college that I was close to and asked them: “What are you guys doing in San Francisco?” A good friend was working at a company called Rapleaf. I talked to him and liked the domain that the company focused on (data management). I reached out to them and followed the interview process. It went by very fast. I got in touch with them in May, and by June, I was on the plane to do onsite interviews. The whole experience was great, and that’s something I’m trying to replicate on how to conduct interviews at Airbyte these days.

I was a senior software engineer there. I never really worked on the Rapleaf product per se because, at that time, there was already a spin-up between Rapleaf and LiveRamp. As a result, I focused most of the time on the LiveRamp product. It was basically about setting up the data integration pipelines: how to get data from customers, how to fit that data into the fragmented ad-tech ecosystem, how to activate the customer data, etc. So that’s my core responsibility: data integration and data management.

On The Data Stack at LiveRamp

At LiveRamp, I was leading a team called Distribution. The goal of that team was to deliver data as quickly as possible. The North Star of the team was that “We should never be the bottleneck.” We wanted to build the data infrastructure in a way that we can firehose all the data to our partners and adapt based on how quickly they could ingest the data. Let’s say a product or a business person asks for engineering time to extract insights from the LiveRamp identity graph (to understand how it behaves). It is costly in terms of engineering time to do this analysis. Given that much friction, the questions asked tend to stay at the surface level.

My team set up the first modern data stack, which is basically what I’m working on at Airbyte today. The goal was to enable roles that are not engineers or less technical to access data and get insights from it. Back then, there was no Snowflake or BigQuery, so we used Redshift and set up the whole thing. Once the relevant data existed in Redshift, we had so many product managers playing with it. The first project saved us over $500k/month of expenses in paying publishers. Overall, it’s about optimizing and understanding if there were issues with how we were matching devices. That significantly impacted how we thought about our publisher network — how to pay them, leverage them, detect misbehaving ones, etc.

It took us maybe one quarter (3 months) to get the first MVP of the data stack and train one or two PMs on how to use Redshift. We were also learning about RedShift on our own, which was hard to manage as the number of users increased. After that, we would have one or two projects dedicated to maintaining and improving the pipeline and the data stack for every quarter. It was expensive, but in the end, we enabled new people to make their own analyses and saved engineering time.

On Hiring Engineering Talent

You need to define the values of your engineering team early on. At LiveRamp:

Engineers need to be good communicators: You have to be able to explain to non-engineers and engineers in an understandable way.

Engineers need to pay attention to details: How do you think this piece of code will be used? What kinds of input will it get? How will the code evolve over time?

If you hire people with these traits, then you don’t need to be behind their backs all the time. You can trust them to do what they say they will do and own how they are going to do it. Trusting and empowering people is the most important thing, especially in engineering. Engineering is a bit of creativity, so you want to make sure that you give the freedom for people to experiment and own the shape of their projects. For that to happen, you need to bring the candidates who can do it. Not everyone can do that.

When you have a team like that, as a manager, you should stay away from providing solutions but always provide the right context — whether business context around the project or internal context about the project. Then, let people understand the context(s) and be aligned with them. I believe the most important thing for fostering strong innovation is giving people freedom and hiring people who can do something with that freedom.

On The Engineering Architecture of rideOS

A ride-hailing application has the backend and the frontend.

The front end is an app on your phone that is dependent on the service you are building. This part is open-source (i.e., the SDK for Android and iOS) in which people can take and customize for their application.

The routing API was the foundational piece of rideOS, which is “how do you go from point A to point B.” Because we also focused on self-driving cars, we had to consider constraints (roads/streets where cars cannot go, maneuvers that cars cannot do, etc.). The routing engine that we were building took into account such constraints, in addition to real-time data ingestion, to fulfill all the car's requirements.

The fleet planner API was about how to manage the fleet. The route is one-car management, while the fleet is multiple-car management. How do you put cars in the right way in the right place so that if there is demand in that area, you can fulfill that demand faster?

The ride-hailing API is about the ride-hailing use cases themselves, and this API uses the fleet planner API and the routing API behind the scene.

When you do ride-hailing at scale, optimization is crucial because it’s a low-margin business. Every cent that you can get with volume will bring your revenue up, so you want to increase that particular piece of the price. For that to happen, you want to dispatch the right car to the right person. A lot of that was about optimization — getting percentage gain at that scale makes your network super-efficient. For that, you need the data. I was integrating the data into the ride-hailing engine (and, as a result, the routing and fleet planner engines) to make sure that we make the fastest decision there.

On Founding Airbyte

I left rideOS in July 2019. For six months until January 2020, I talked to as many people as possible while also looking for a great co-founder, and John Lafleur is a great co-founder. We brainstormed a ton of ideas together. Every idea sounds good on paper. But as we got deeper into it, we realized that some ideas were terrible. When an idea passed the filters, we needed external validation to determine whether it made sense to work on them. Eventually, we decided on data integration, given our past working experience.

We applied to Y Combinator in November 2019 and got in their Winter 2020 cohort. While at YC, we continued to do user interviews. I think before building any product, you need to talk to as many potential customers as possible. What you have in your head is generally not enough, and you need external inputs to understand that you are indeed solving the right problems. At YC, we refocused our idea to be more marketing-oriented and built a product that got a ton of traction.

When COVID-19 hit, a lot of companies started removing their marketing budget. We began not to be able to move forward with new deals. That’s when we realized that what we were building was more a good-to-have than a need-to-have.

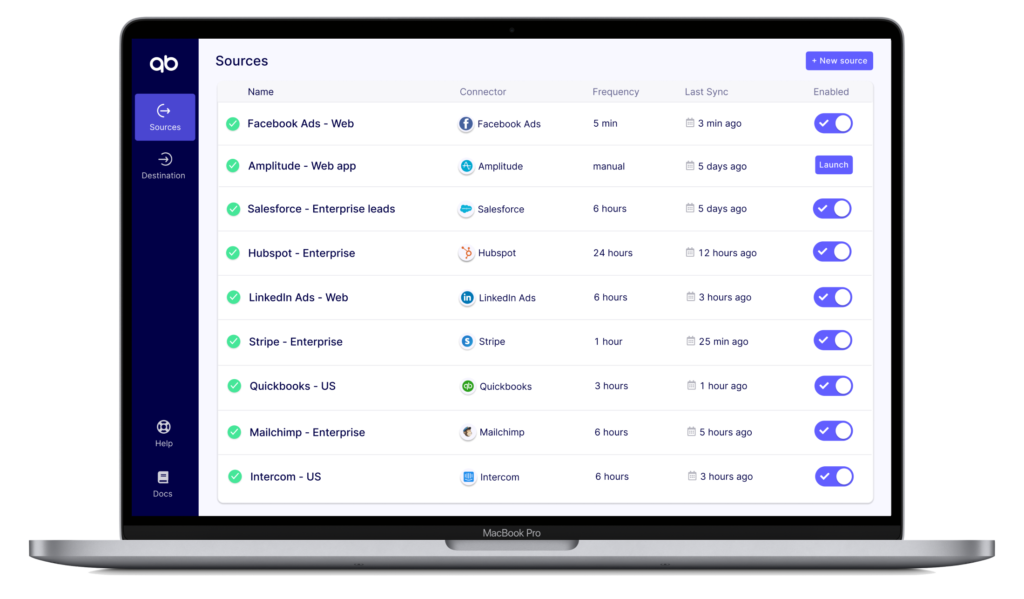

In July, we pivoted and re-centered our product to be more data integration at the data engineering level. We went head down that direction of what Airbyte is known as today. We released the first version at the end of September 2020. Since then, we have had a huge adoption, so I am thrilled that we made the pivot.

I won’t lie to you; it was hard to make that pivot. My daughter told me that I might have gotten some gray hair at that time. But it was better for the team and our investors to do that at the end of the day.

On Doing User Interviews

We basically automated the outreach. We created a list of companies and extracted relevant roles of the employees in those companies from LinkedIn. Then we started outreaching to them.

The response rate is going to be low. If you have a list of 10 people, then maybe one person will reply to you. So you need to have a large number of people that you can reach out to because only a few percentages will actually convert.

Internally, we targeted multiple roles such as data engineers, senior data engineers, and other data roles that gravitate around data. This gave us different perspectives: data engineer is the producer, while data analyst or data scientist is the consumer. They have different problems and different perspectives on the problem. I think it’s imperative to keep a foot into understanding what the market needs in order to cover the gap in our product offering.

On The Future of Data Integration

We are in the new age of data, in which warehouses have enabled more people to work with data. Before, a lot of data work required data engineering expertise. Once you start opening the door of warehouses, you suddenly have more people with different objectives than you who might want to experiment with data. If the engineering bottleneck always blocks them, then you will never achieve any innovation.

A lot of the current data integration offerings are not empowering these new roles. While warehouses are popular, there is still a missing link: how to get data into the warehouses. Solutions like Fivetran or Stitch have frictions in terms of data security because they are cloud-based services. You need to have your internal data transited to somewhere else before going into your warehouse. For organic adoption of a product, that’s generally a problem. Some companies just don’t want to get the data elsewhere before putting it into their own infrastructure.

Another problem is that you have so many different places where you can obtain data. If you build 50 connectors, you might cover 60% of the use cases and have 40% left. While talking with different companies, we noticed that everyone was working on this 40-percent and doing the same thing repeatedly. It’s a huge drain for the engineering team to build and maintain these connectors.

Airbyte’s goal is to fix the problem of repeatedly building connectors by sharing the load of maintaining these connectors with the community. Connectors become commoditized and accessible to everyone. If this connector becomes popular, you can get the community to help you maintain it and share the burden. You can customize the connector as needed instead of starting one from scratch.

On Building Data Connectors

Data integration means carrying the data from point A to point B. Basically, you need to make sure that the data can translate. What helps with the translation is a protocol for exchanging data. The protocol is very low-level with some features, and it will be very costly to build against the protocol. If you need to address 10,000 connectors, you will write a ton of code for those 10,000 connectors.

Instead, you can build abstractions on top of your protocol.

If an abstraction can’t address specific use cases, you can always go down to the protocol level. Your goal is to lower the amount of code that is necessary to be able to connect the data. The less code you have, the easier the maintenance is going to be.

The abstraction should also be tailored to the particular use case. If you have a synchronous API, you can create an abstraction just for that. It means that now you can build a connector in two hours instead of three days.

By building this framework, you can also build in features that make it easier to build. How to handle errors? How to communicate with Airbyte? The day an abstraction stops working, you can create a new one that allows you to address that particular abstraction family. If you can’t do an abstraction, you can always go down to the root of your onion (the protocol) and build a role integration.

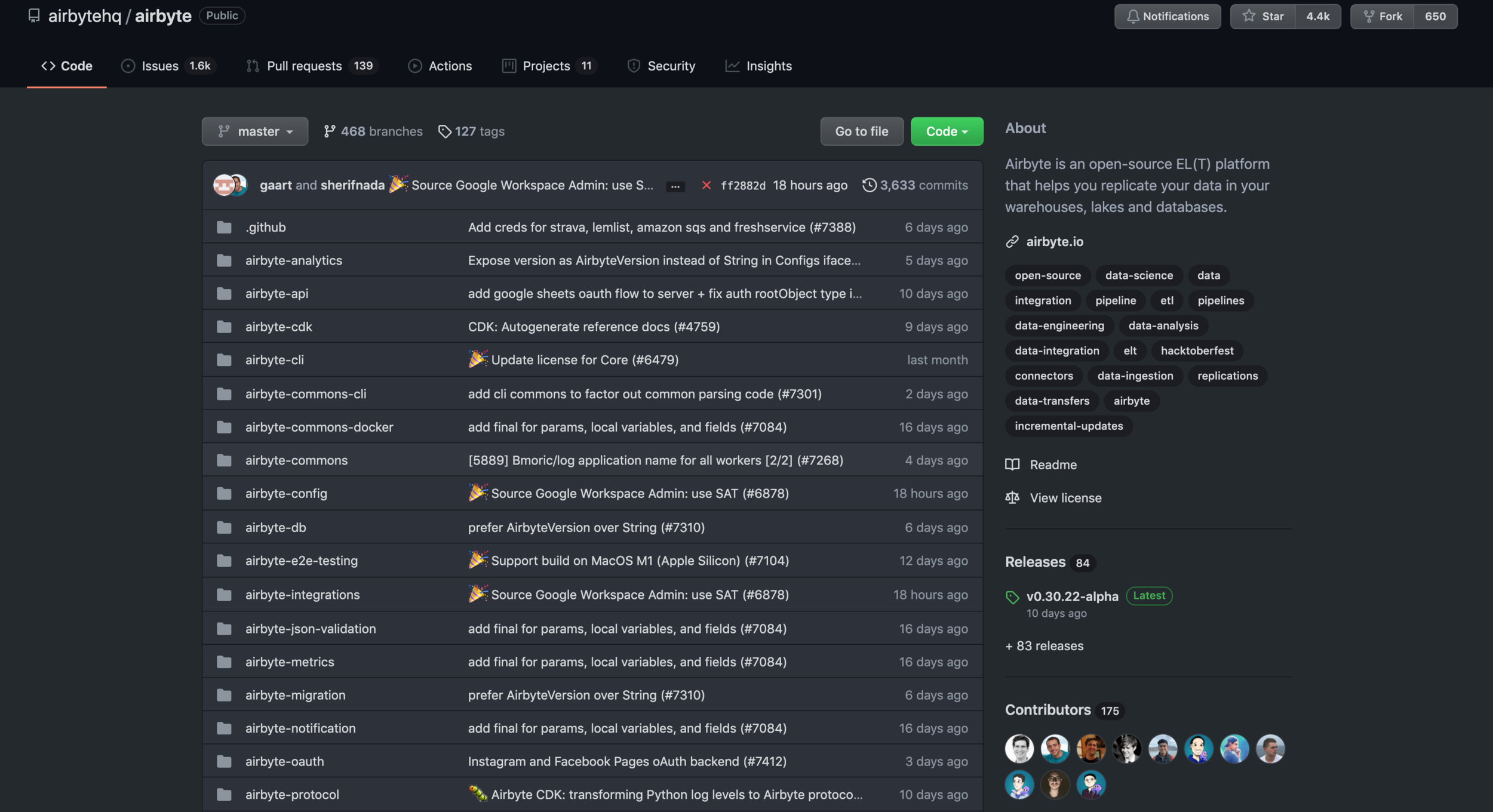

On Open-Source Product Roadmap

In an open-source project, the community helps build and maintain connectors. We need to make sure that we empower the community to make decisions on connectors and that these decisions gather under Airbyte. The first few developments will be low-quality, but then with iterations, they will become better.

We prioritize Airbyte’s roadmap based on conversations with practitioners and feedback from the open-source community. A substantial positive point of having and working with the community is the access to the internal needs of companies — which are usually very hard to discover). In general, we prioritize features that can address different personas and use cases.

On Open-Source Pricing Strategy

A standard pricing strategy today for any open-source solution is to have a hosted version of your product. Most companies want to test but not manage open-source products. If they decide to use your hosted version, you can price your product by volume or features.

Another pricing strategy is the open-core model. The community edition that you’ve created is always free. Every single feature that enhances the standard open-source project becomes a paid feature. Instead of charging per volume, you charge per feature or consumption.

I’m going to be transparent. We haven’t figured out the pricing yet, and we are focusing on the Community Edition. Generally speaking, anything beneficial to one individual should be free, while anything beneficial to an organization or a team should be chargeable.

On Community Management

For every single project, there is a cost of onboarding someone for us. How do you make the experience of contributing easy for someone new to the project? We have consciously invested a ton in the developer experience. For example, we have one-on-one sessions with them to walk through different parts of the project and the documentation that is relevant to what they want to do. Obviously, it took time from us as we continued to add more features. But at the same time, I think the community loves that. It’s has become easier to get into the project and start contributing connectors.

Although the number of GitHub stars is a vanity metric, it still plays a role in how much confidence you have in adopting a project. The metrics that we track internally at Airbyte are the number of people who deploy Airbyte, the number of people who replicate their data with Airbyte, and how often they replicate their data. We optimize for Airbyte deployments in production (as Airbyte becomes a part of their data stack). Every time someone tests us, we get in touch with that person to understand their needs and what might prevent them from using Airbyte in production.

On Hiring

There is always a risk when you hire someone because you might hire the wrong person who either won’t deliver or won’t fit your company culture. I recommend that whenever you start a company, try to hire people whom you have worked with in the past. You had basically interviewed them when you worked with them, and this makes managing people a lot easier since we already know each other.

The con of doing that, especially in engineering, is the bias. There won’t be a lot of diversity in these networks. You need to compensate for that at some point to make sure that you create a diverse team. That’s when you need to start recruiting from the outside. When interviewing, we look for their ability to own the outcome of every single project by digging into the details of their past projects.

Every engineer that we talked to has encountered a data integration problem in the past. So it is not a hard sell. They understand the pain point and why open-source is the right solution for solving this problem.

Having large fundraising rounds also helps because people look for some kind of stability in their careers.

On Fundraising

You should talk to partners at VC firms who understand your industry. You don’t want to sell a Calendar app to someone who invests in deep tech; you will waste your time. It’s about identifying which company gravitates in the same industry you operate in and then getting in touch with the VC partners who invest in their seed or Series A rounds. Cold emails tend not to work well, so find people who know them and can introduce you. Overall, stay focused and target the right partners at the right firm.

We avoid random meetings with VCs. It’s a big energy drain since it’s very different from what we do day-to-day. Thus, we try to group these meetings together. With this approach, we have the context in mind and become better at exposing the progress of our company.

Timestamps

(01:58) Michel went over his education studying at EPITA — School of Engineering and Computer Science in France.

(03:50) Michel mentioned his first US internship at Siemens Corporate Research as an R&D engineer.

(05:48) Michel discussed the unique challenges of building systems to handle financial data through his engineering experience at FactSet Research Systems and Murex.

(07:48) Michel talked about his move to San Francisco to work as a Senior Software Engineer at Rapleaf, focusing on scaling up data integration and data management pipelines.

(10:40) Michel unpacked his work building the modern data stack at LiveRamp.

(16:18) Michel shared valuable leadership and hiring lessons absorbed during his time as Liveramp’s Head of Data Integrations — fostering a strong culture of innovation and expanding the engineering organization significantly.

(19:03) Michel dived into how to interview engineering talent for independence, autonomy, and communication.

(20:56) Michel dissected the engineering architecture of the rideOS ride-hail platform (where he was a founding member and director of engineering).

(26:03) Michel told the founding story of Airbyte, whose mission is to make data integration pipelines a commodity (+ the pivot that happened during Airbyte’s time at Y Combinator).

(32:10) Michel explained the paint points with existing data integration practices and the vision that Airbyte is moving towards.

(35:07) Michel unpacked the analogy of Airbyte’s approach to building a connector manufacturing plant, which is to think in onion layers.

(39:13) Michel shared the challenges that are still hard for an open-source solution to address (Read his list of challenges that open-source and commercial software face to solve the data integration problem).

(40:28) Michel discussed how to prioritize product roadmap while developing an open-source project.

(41:59) Michel discussed pricing strategies for open-source projects (Airbyte’s business models entail both self-hosted and hosted solutions).

(44:17) Michel revealed the hurdles that Airbyte has overcome to find the early committers for their open-source project.

(47:53) Michel shared valuable hiring lessons learned at Airbyte.

(50:16) Michel shared fundraising advice for founders seeking the right investors for their startups.

(52:41) Closing segment.

Michel’s Contact Info

Mentioned Content

Airbyte (Docs | Community | GitHub | Twitter | LinkedIn)

Blog Posts

“The Hard Things About Pivoting” (July 2020)

“How Can We Commoditize Data Integration Pipelines” (Sep 2020)

“How to Build Thousands of Connectors” (Oct 2020)

“The Deck We Used to Raise Our Seed with Accel in 13 Days” (March 2021)

People

Jeremy Litz (Former CTO and Co-Founder of LiveRamp)

Tristan Handy (CEO of dbtLabs and Editor of the Analytics Engineering Newsletter)

Book

“High-Growth Handbook” (by Elad Gil)

Notes

My conversation with Michel was recorded back in April 2021. Since the podcast was recorded, a lot has happened at Airbyte! I’d recommend:

Looking over the deck that they used to raise a $26M Series A led by Benchmark.

Reading Michel’s take on Airbyte’s new OSS model and strategy to commoditize all data integration.

Diving into Airbyte Cloud, a hosted service that takes all of the features of the open-source version and adds hosting and management, on top of a number of additional support options and enterprise features.

Subscribing to Airbyte’s newsletter called Weekly Bytes and exploring Airbyte Recipes.

About the show

Datacast features long-form, in-depth conversations with practitioners and researchers in the data community to walk through their professional journeys and unpack the lessons learned along the way. I invite guests coming from a wide range of career paths — from scientists and analysts to founders and investors — to analyze the case for using data in the real world and extract their mental models (“the WHY and the HOW”) behind their pursuits. Hopefully, these conversations can serve as valuable tools for early-stage data professionals as they navigate their own careers in the exciting data universe.

Datacast is produced and edited by James Le. Get in touch with feedback or guest suggestions by emailing khanhle.1013@gmail.com.

Subscribe by searching for Datacast wherever you get podcasts or click one of the links below:

If you’re new, see the podcast homepage for the most recent episodes to listen to, or browse the full guest list.