Recently, I attended the All Tech Is Human event at the ThoughtWorks office in Midtown Manhattan. It was an all-day ethical tech summit that brought together technologists, academics, advocates, students, organization leaders, artists, designers, and policymakers. There was a mix of lightning talks, topical panels, strategy sessions, and informal networking with people in the tech change ecosystem. The event was organized by the organization of the same name, All Tech Is Human, a catalyst and connector for tech change. In this blog post, I want to share the main points and key takeaways I got out of attending the event.

1 — Lightning Talks Round 1

The first round of lightning talks started with Dr. Courtney Cogburn, an Assistant Professor at the Columbia University School of Social Work. Her work explores the potential of media and technology to eradicate racism and eliminate racial inequities in health. In her talk "Anti-Racism In Tech," she mentioned the film 1000 Cut Journey, an immersive virtual reality experience of racism that premiered at the 2018 Tribeca Film Festival. She also discussed five major roadblocks to fighting racism in tech: (1) people avoid talking about race, (2) people do not understand racism, (3) people focus on the individual designation, (4) people focus on individuals rather than systems, and (5) diversity alone won't fix racism. Her talk ended strongly with the argument that equality is not possible without anti-racist and anti-oppressive intentions.

The second talk came from Chris Wiggins, Chief Data Scientist at The New York Times and an Associate Professor of Applied Math at Columbia University. In his talk "What future statisticians, CEOs, and senators should know about the history and ethics of data," Chris presented a course he teaches at Columbia called "Data: Past, Present, and Future." The course covers the history of human use of data; functional literacy in how data are used to reveal insight and support decisions; critical literacy in investigating how data and data-powered algorithms shape, constrain, and manipulate our commercial, civic, and personal transactions and experiences; and rhetorical literacy in how the exploration and analysis of data have become part of our logic and rhetoric of communication and persuasion, especially visual rhetoric. Chris concluded by emphasizing that to find the future, it's important to analyze past and present contests of power. All the lectures, labs, syllabus, and resources are available to the public, so I highly recommend checking them out.

The third talk was delivered by Dr. Tracy Dennis-Tiwary, a Professor of Psychology and Neuroscience at Hunter College and the Graduate Center of the City University of New York. Her research focuses on stress and anxiety, as well as the interplay between digital tech and emotional well-being. Her talk "Screentime and Relationships" argued that it is dangerously easy to disappear into our phones, and that this erosion of joint attention (the attention we share with the people around us) is bad for our emotional wellness. This topic is very popular in the press these days, and I have had my fair share of exposure to it after reading Cal Newport's "Digital Minimalism" and Nir Eyal's "Indistractable."

2 — Global Outlook for Responsible Tech

This panel was moderated by Chine Labbe, a Senior Editor at NewsGuard and host/producer of Good Code, a podcast on ethics in digital technologies. The panelists were Anoush Rima Tatevossian (strategic communications advisor and consultant at the United Nations), Mona Sloane (sociologist and fellow at NYU's Institute for Public Knowledge), and Mark Latonero (Research Lead for Human Rights at Data & Society and a Fellow at Harvard Kennedy School's Carr Center for Human Rights Policy).

Here are the major points the panelists raised during this fireside chat:

Anoush talked about a recent United Nations report called "The Age of Digital Interdependence." She brought in examples ranging from a national health crisis in India to cyberattacks on global shipping. Drawing on her years of experience leading a variety of UN initiatives, she said that the global development of responsible technology must involve multiple stakeholders (technologists, economists, activists, politicians, etc.) and must be agile and dynamic. She emphasized the importance of understanding how the other players in this space speak, and hence the need for translators who can bridge them. Thus, we would probably have to reframe the notion of expertise to incorporate these translation skills into the digital workforce.

Mark's work focuses on the social and policy implications of emerging technology and examines the benefits, risks, and harms of digital tech, particularly for vulnerable and marginalized people. Mark argued that human rights should serve as the North Star in any AI strategy because they offer a ready-made framework that can act as a blueprint regardless of the jurisdictions, statuses, or countries involved. He talked a bit about human rights impact assessments and how they can be incorporated into business decision-making. He strongly asserted that deploying technology in the wild with a "Move Fast and Break Things" mindset is highly irresponsible and arrogant. Talking about his research on digital identities for refugees, Mark said that there is already a large power imbalance when refugees attempt to enter a new territory, and technology adds another layer that exacerbates this power struggle.

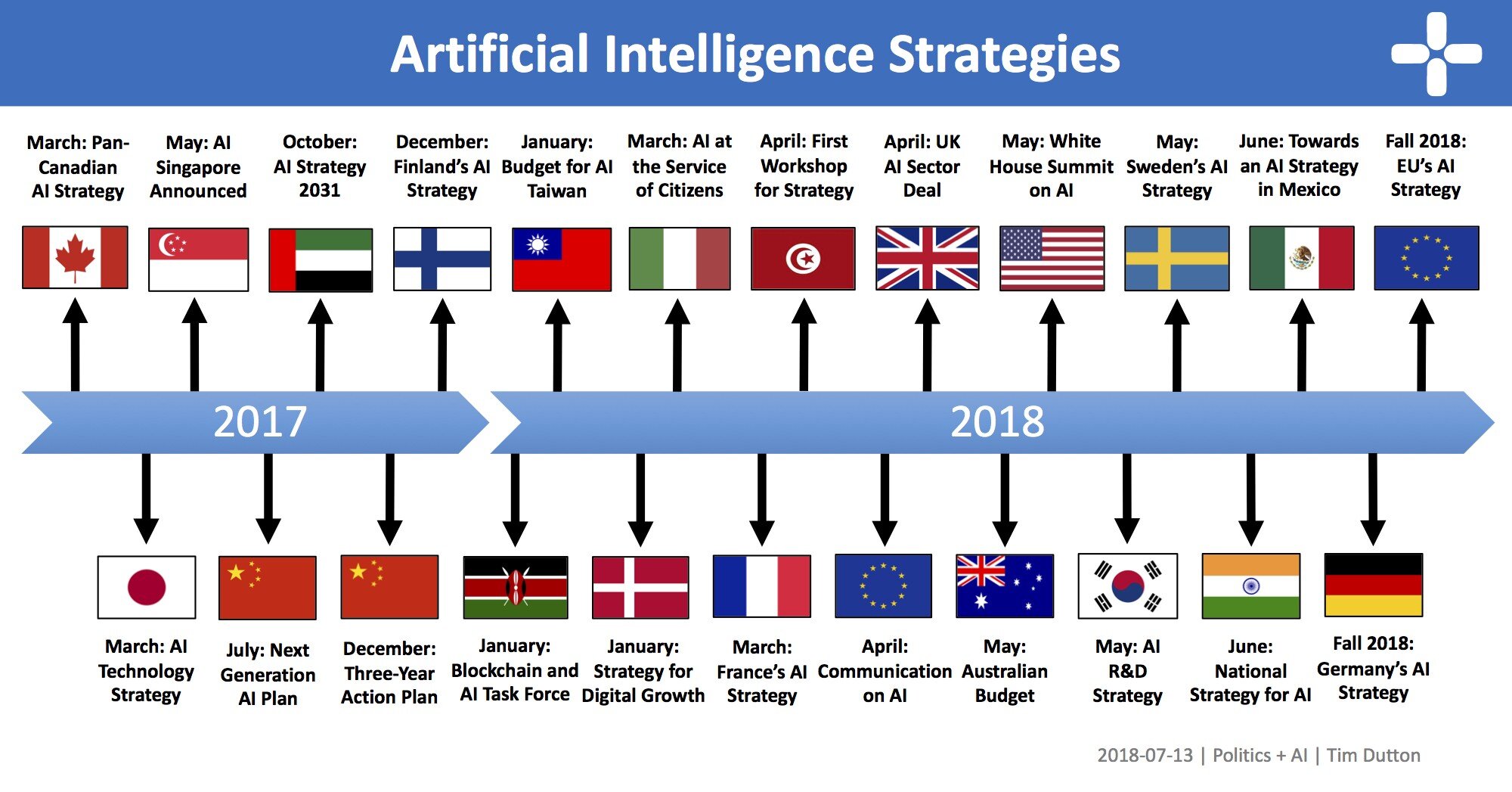

Mona's work examines the intersection of design and social inequality, particularly in the context of AI design and policy, valuation practices, data epistemology, and ethics. During the chat, she talked about the notion of a "National AI Strategy," under which many countries are developing AI to compete in the global economy and strengthen national security. Because of this AI arms race, countries are using ethics to position themselves in this commercial and global space. When asked about her thoughts on political ads on social media platforms, she questioned the platforms' claims of good intentions and emphasized the importance of doing R&D along the lines of consent and recidivism. Lastly, she called on universities and the broader educational system to take responsibility for breaking down the walls of the academic "ivory tower" and developing translation skills (echoing Anoush's point above).

3 — Tech and Policy

This panel was moderated by Allie Brandenburger, the co-founder and CEO of TheBridge. The panelists were Victoria McCullough (Director of Social Impact and Public Policy at Tumblr), Natalia Domagala (Data Policy, Strategy & Ethics at the Department for Digital, Culture, Media, & Sport in the UK), and Yael Eisenstat (Visiting Fellow at Cornell Tech in the Digital Life Initiative). A big part of this panel conversation concerned whether social media platforms should be allowed to display political advertisements, in light of recent events at Twitter and Facebook.

Here are the key takeaways I got from each of the panelists:

Drawing on her past work at the CIA and Facebook, Yael said that society at large needs to determine what should be allowed on the Internet. Moreover, given Instagram's change to the ranking algorithm of its news feed (from recency ranking to interest ranking), she argued that platforms that serve as digital curators should be regulated, and that there is a real need to make recommendation engines more transparent.

Bringing in her perspective from the UK, Natalia said that because of the upcoming election, a community letter has been circulating that asks Facebook and Google not to show political ads in the next six weeks. She also mentioned that the UK government has published a white paper on online harms and is in the process of creating a code of conduct that sets out regulatory terms for social media platforms.

In her role at Tumblr, Victoria focuses on engaging and activating advocacy groups, activists, and other change-makers to tell powerful stories, catalyze engagement with the Tumblr community, and drive measurable impact. She shared that Tumblr has had a really tough time monetizing ads on its platform because it lacks the technical infrastructure to sort through them. Finally, she emphasized that there is a big need for digital citizenship skills, which the government can help promote.

4 — Lightning Talks Round 2

The second round of lightning talks started with Lisa Lewin, Managing Partner at Ethical Ventures, a New York City-based management consulting firm, where she leads large-scale organizational transformation for for-profit, nonprofit, and public sector clients. Lisa's talk encouraged businesses to think beyond fiduciary duty and look at moral duty. She proposed concrete actions that executives can take: draw bright lines between moral and immoral actions, demand ethics KPIs, establish a board ethics committee, ethics-test corporate strategies, and engage with employees and users.

The next talk came from Flynn Coleman, a writer, international human rights attorney, public speaker, professor, and social innovator. She is the author of a newly published book, A Human Algorithm: How Artificial Intelligence Is Redefining Who We Are, a groundbreaking narrative on the urgency of ethically designed AI and a guidebook to reimagining life in the era of intelligent technology. Her talk, "How to Thrive in the Intelligent Machine Age: The 5 Essentials," distilled key ideas from her book. What I really liked about her talk was the large number of quotes from well-known thinkers, including Marshall McLuhan, Amit Ray, Frank Mot, Dave Gershgorn, RJ Steinberg, Radhika Dirks, Eliezer Yudkowsky, Bill Bullard, Sir Ken Robinson, and Kevin Kelly. Here are the five essentials from her talk:

Acknowledge that no single one of us has all the answers.

Invite a wide diversity of voices into the conversation.

Value all living things and all forms of intelligence.

Encode values and empathy into technology.

Stay curious and embrace the uncertainty of life.

The last lightning talk was delivered by Solon Barocas, a Researcher in the New York City lab of Microsoft Research and an Assistant Professor in the Department of Information Science at Cornell University. He is also a Faculty Associate at the Berkman Klein Center for Internet and Society at Harvard University. His research explores ethical and policy issues in artificial intelligence, particularly fairness in machine learning, methods for bringing accountability to automated decision-making, and the privacy implications of inference. He is also well known for organizing the ACM Conference on Fairness, Accountability, and Transparency (FAT*), which I learned about during my recent summer internship on machine learning explainability. Solon's talk "Doing justice to fairness in machine learning" provided a brief introduction to machine learning fairness, which can be categorized as fairness as decision-making and fairness at decision time. He also unpacked the main critiques of computational approaches to fairness: that they are bloodless, naive, over-claiming/under-delivering, incremental, unaccountable, complicit, captured, and distracting. He argued that the politics of fairness invites criticism from different sides, and that fairness overall is more about policymaking than about decision-making.

5 — Data Governance

This panel was moderated by Samantha Wu, the Data Policy Product Manager at Stae. The panelists were Madhulika Srikumar (Inlaks Scholar at Harvard Law School), Mary Madden (Research Lead at the Data & Society Research Institute), and Robin Berjon (Executive Director of Data Governance at The New York Times). The topic of this panel was data governance, which can be defined as the overall management of the availability, usability, integrity, and security of the data used in an enterprise. It matters a lot for businesses because it ensures that data is consistent and trustworthy.

To explain why data governance has become such a trendy topic now, the panelists raised two main points: (1) the rise of open-source software leads to unpredictable use cases, and (2) businesses cannot innovate at the technical level if they do not innovate at the ethical level. So what does governance look like for data? Robin contrasted good data governance (effectiveness) with fast data governance (efficiency) and described some internal tools developed at The New York Times that aim to capture readers' intentions while preserving their agency. He also emphasized the need to build contextual and intellectual tools that can break data governance down into manageable chunks. Madhulika said that there have been models used to privatize the harm done to users, and she suggested that we can now pull non-personal and community data from private companies and direct it toward other purposes.

Conclusion

I had a ton of fun meeting and learning from people of different professions and backgrounds during this event. More importantly, the event exposed me to the ethical side of technology, which is sometimes lacking in the engineering and data science community to which I belong. If you are motivated to join this community and co-create a more thoughtful future for technology, I highly suggest checking out AllTechIsHuman.org or getting in touch with its founder, David Ryan Polgar. I look forward to sharing more content about the implications of technology in future blog posts.